Yesterday, after spending many frustrating hours trying to resolve a problem with a VM that had been handed off to me, I tweeted “Wow. Cpanel is awful. O_O”. The developers, rightly, were not pleased to hear this and invited me to share my issues with them. As a strong – some might say annoyingly persistent – advocate of direct engineering/developer engagement with end users, and of the customer advocacy approach to community engagement, it would be hypocritical of me to ignore this request. Whatever I may think of cPanel the application, developers reaching out to people they don’t follow on Twitter who express dissatisfaction with their product is exactly the kind of community engagement I believe in. So, rather than simply leave an angry throwaway tweet, I am going to do something of a teardown of my encounter with cPanel: what went wrong, what went right, what is bias on my part and what is clearly broken.

The problem environment

The VM in question is a CentOS 6.x VM running cPanel 56.0.32. The VM is running on on-premises hardware, so I have console access and thus the ability to perform maintenance outside of the cPanel interface. The VM runs on VMware infrastructure, and did not appear to have VMware Tools installed when I got hold of it. (This has now been remedied.) The VM has 4GB of RAM, a pair of vCPUs, and a 40GB drive that is roughly 60% full. The system is used primarily for hosting a small ecommerce site and its associated database. The system was configured by a third party and handed off to a client of mine. The client configured basic items, such as e-mail addresses, in case something goes sideways on the system. The system...

Beyond the traditional storage gateway

posted by Trevor Pott

Bringing together all storage into a single point of management, wherever it resides, has long been a dream for storage administrators. Many attempts have been made to make this possible, but each has in some way fallen short. Part of the problem is that previous vendors just haven’t dreamed big enough. A traditional storage gateway is designed to take multiple existing storage devices and present them as a single whole. Because storage gateways are a (relatively) new concept, all too often the only storage devices supported were enterprise favourites, such as EMC and NetApp. Startups love to target only the enterprise. Around the same time as storage gateways started emerging, however, the storage landscape exploded. EMC, NetApp, Dell, HP, and IBM slowly lost market share to the ominous “other” category. Facebook and other hyperscale giants popularized the idea of whitebox storage, and shortly thereafter Supermicro became a $2 billion per year company. As hyperscale talent and experience spread, public cloud storage providers started showing up. Suddenly a storage gateway that served as a means to move workloads between EMC and NetApp, or to bypass the exorbitant prices those companies charged for certain features, just wasn’t good enough. Trying to force a marriage between different storage devices on a case-by-case basis isn’t sustainable. Even after the current proliferation of storage startups comes to an end, and the inevitable contraction occurs, there will still be dozens of viable midmarket and higher storage players with hundreds of products between them. No company – startup or not – can possibly hope to code specifically for all of them. Even if, by some miracle, a storage gateway taking this boil-the-ocean approach did manage to bring all relevant storage into its offering one product at a...
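To make the "single whole" idea concrete, here is a minimal sketch of a gateway that presents several backends as one namespace. It assumes a hypothetical common interface (`StorageBackend` with `read`/`write`) that each backend adapter implements; `InMemoryBackend` merely stands in for a real array, whitebox server or cloud bucket. None of these names come from any real gateway product.

```python
from abc import ABC, abstractmethod


class StorageBackend(ABC):
    """Hypothetical minimal interface that each backend adapter implements."""

    @abstractmethod
    def read(self, key: str) -> bytes:
        """Return the object stored under key."""

    @abstractmethod
    def write(self, key: str, data: bytes) -> None:
        """Store data under key."""


class InMemoryBackend(StorageBackend):
    """Stand-in for a real array, whitebox server or cloud bucket."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}

    def read(self, key: str) -> bytes:
        return self._objects[key]

    def write(self, key: str, data: bytes) -> None:
        self._objects[key] = data


class Gateway:
    """Presents several backends as one namespace, routed by path prefix."""

    def __init__(self) -> None:
        self._mounts: dict[str, StorageBackend] = {}

    def mount(self, prefix: str, backend: StorageBackend) -> None:
        # Normalise to a trailing slash so "/san" does not also match "/sandbox".
        self._mounts[prefix.rstrip("/") + "/"] = backend

    def _resolve(self, path: str) -> tuple[StorageBackend, str]:
        # Longest matching prefix wins, so a nested mount overrides its parent.
        for prefix in sorted(self._mounts, key=len, reverse=True):
            if path.startswith(prefix):
                return self._mounts[prefix], path[len(prefix):]
        raise KeyError(f"no backend mounted for {path}")

    def read(self, path: str) -> bytes:
        backend, key = self._resolve(path)
        return backend.read(key)

    def write(self, path: str, data: bytes) -> None:
        backend, key = self._resolve(path)
        backend.write(key, data)
```

The design point is that supporting a new kind of storage means writing one adapter against the shared interface, rather than a bespoke integration for every pair of devices, which is the case-by-case marriage the post argues cannot scale.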

Data residency made easy

posted by Trevor Pott

Where does your data live? For most organizations, data locality is as simple as making sure that the data is close to where it is being used. For some, data locality must also factor in geographical location and/or legal jurisdiction. Regardless of the scope, data locality is a real-world concern for an increasing number of organizations. If this is the first time you are encountering the term data locality, you are likely asking yourself – quite reasonably – “do I care?” and “should I care?” For a lot of smaller organizations that don’t use public cloud computing or storage, the answer is likely no. Smaller organizations using on-premises IT likely aren’t using storage at scale or doing anything particularly fancy. By default, the storage small IT shops use lives with the workload that uses it. So far so good… except the number of organizations that fall into this category is shrinking rapidly. It is increasingly hard to find someone not using public cloud computing to some extent. Individuals as well as organizations use it for everything from hosted email to Dropbox-like storage to line-of-business applications and more. For the individual, cost and convenience are usually the only considerations. Things get murkier for organizations.

The murky legal stuff

Whether you run a business, government department, not-for-profit, or other type of organization, at some point you take in customer data. This data can be as simple as name, phone number, and address. It could also be confidential information shared between business partners, credit card information, or other sensitive data. Most organizations in most jurisdictions have a legal duty of care towards customers that requires their data be protected. Some jurisdictions are laxer than others, and some are more stringent with their requirements based on the...
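One way to think about data residency is as a rule that maps each class of data to the jurisdictions where it may be stored. The sketch below is illustrative only: the policy table, the data class names and the country codes are all hypothetical, not a statement of what any law actually requires.

```python
from dataclasses import dataclass

# Hypothetical policy: the jurisdictions each class of data may be stored in.
RESIDENCY_POLICY: dict[str, set[str]] = {
    "public": {"CA", "US", "EU"},
    "customer_pii": {"CA"},
    "payment_card": {"CA"},
}


@dataclass(frozen=True)
class StorageLocation:
    name: str
    jurisdiction: str  # whose laws govern the datacenter holding the data


def permitted(data_class: str, location: StorageLocation) -> bool:
    """Return True if data of this class may live at the given location."""
    allowed = RESIDENCY_POLICY.get(data_class)
    if allowed is None:
        # Unclassified data is refused rather than silently allowed anywhere.
        return False
    return location.jurisdiction in allowed
```

For example, with `on_prem = StorageLocation("head-office", "CA")` and `cloud_us = StorageLocation("cloud-us-east", "US")`, customer PII is allowed on premises but refused in the US region, while public data may go to either. Refusing unclassified data by default is a deliberate choice in this sketch: it forces someone to decide where new data may live.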

DevOps shouldn’t be a straitjacket

posted by Trevor Pott

The DevOps movement has a dirty little secret it doesn’t want anyone talking about. DevOps isn’t about making you money, or making your life easier. DevOps is about making vendors and consultants money. If you’re lucky and wise you will be able to choose good partners who, in the course of enriching themselves, also make your business significantly more efficient. But how do you narrow the playing field? The most important thing to do is ask “where does the money go?” Anyone banging on about an IT industry buzzword wants your money. The question is how much they want and what they are proposing to give you for it. In almost every case you don’t need a consultant to get to DevOps. I can tell you the secret sauce of their advice right here: DevOps is hard to implement. This is not because the tools are difficult, the theory is complicated or the value is vague and nebulous. DevOps is hard to implement because it means radical change for many people, many of whom cannot easily see how or why they will still be employed after the process is completed. Fear and a very human resistance to change are what stand in the way of “implementing DevOps culture”. No consultant can change that. No consultant can do anything to ease people through the process or soothe their fears. Management of the business in question need to recognize the fears of the technologists they employ, engage with them, and provide assurances that everything will be all right once the dust settles. No consultant can do that for management. The best they can do is spend their time trying to serve as a translation layer: explaining to management why the tech staff are reluctant, and explaining to...

VMworld 2015 HOMELAB GIVEAWAY

posted by Josh Folland

VMworld 2015 is coming up fast, and that means CONTESTS! vExperts always get the extra-special treatment, and this year is no exception. Catalogic Software is looking to lead the pack by giving away a complete turnkey VMware homelab kit to one lucky vExpert or NetApp A-Team member. This is a serious prize that has even us at WeBreakTech drooling. What can I say, we’re suckers for flashy hardware! Up for grabs is a complete homelab package, decked out with the following:

- 1x Cisco SG300-20 managed switch
- 1x 2U NetApp FAS 2020 3.6TB Filer
- 2x 2U servers w/ quad-core Intel processor and 32GB DDR4 memory
- 1x Catalogic ECX perpetual license
- 1 year VMware VMUG Advantage w/ Eval Experience license
- $300 to soup up the lab, customize it or pick up a rack

Now that you’re foaming at the mouth, I’m sure the next question on your mind is something along the lines of “shut up and tell me how to sign up already!” The good news is that it’s quite simple and shouldn’t take longer than 10-15 minutes for the savvy vExpert and A-Team nerds. Head over to Catalogic’s contest page here, where you’ll find a selection of questions related to Catalogic’s bread and butter, copy data management. Choose one of them and answer it in 300 words or less in the comments section of that page, or post a meaningful reply to another commenter’s response. Remember to restate the question at the beginning of your comment, or refer to the original post if you’re replying to someone. You’re free to submit as many entries as you’d like – the number of submissions isn’t considered, only their individual merit – so each extra entry still increases your odds. The contest ends on September 2nd, 2015 and the winner (who...

Supermicro, VSAN and EVO:Rail

posted by Trevor Pott

Supermicro and VMware have released a new generation of VMware EVO:RAIL appliances and VMware Virtual SAN Ready Nodes. The new Supermicro servers are arriving alongside the release of VMware’s vSphere 6.0 suite, VMware’s most important release in several years. Combined, the Supermicro and VMware launches are more than simply an incremental improvement and serve as an exceptional showcase for the power and flexibility the modern software defined datacenter is capable of delivering. The 2015 generation of Supermicro servers specially designed with VMware’s VSAN and EVO:RAIL in mind are the 2U TwinPro² and the 4U FatTwin series servers. Supermicro’s various partners have led the industry in delivering hyperconverged solutions using previous generations of Twin series servers. Lessons learned there, combined with the increased capability of the next generation of VMware software, have driven the design of the next generation of Supermicro Twin series servers.

Increments of change

A generational change can occur either because a major feature is introduced, or through a series of iterative improvements that, combined, result in a significantly superior product. The 2015 Twin series servers have done both. Supermicro hasn’t simply relied on evolving standards and new generations of equipment being made available by upstream partners. The quality of Supermicro servers (which Supermicro feels was already quite good) has been significantly improved. Supermicro is constantly working with customers and partners to identify design elements that need improvement, and the 2015 servers are the result of many such changes. The most notable incremental improvements are the addition of Supermicro’s Titanium Level high-efficiency digital power supplies (more detail below) and architecture designs that improve airflow for optimal cooling.
In addition to these green computing improvements, the rail kits have undergone several design tweaks...

Make a #WebScaleWish

posted by Trevor Pott

Today, November 21st, is the last day to submit a non-profit organisation to Nutanix’s generous #WebScaleWish datacenter makeover. Nutanix does one thing – enterprise-class hyperconverged server and storage systems – and it does it well. The #WebScaleWish project is Nutanix’s way of giving back to the community. Instead of simply plowing money into a random charity, they’re using their expertise in their chosen field of endeavour to try to make a chosen non-profit more efficient. I’ve spent a lot of time with Nutanix staffers. They honestly believe that using hyperconverged infrastructure – rather than the more traditional compute nodes + networked SAN – will streamline IT operations for companies. The result for enterprises is ultimately lower costs. What’s important is how you get to those lower costs: by removing a lot of administrative burden from systems administrators. This frees those systems administrators to do something more profitable with their time, saving the company money. But let’s look at what Nutanix could do for a small non-profit.

KiN

I nominated the Kaslo infoNet (KiN) for Nutanix’s #WebScaleWish. KiN is a non-profit dedicated to bringing high-speed internet to underserved communities along Kootenay Lake in British Columbia, Canada. On an absolute shoestring budget they are putting up wireless towers for microwave backhaul and trenching Fibre-to-the-Premises in the town of Kaslo. The local incumbent telcos had shown zero interest in providing usable broadband service to the town of Kaslo or the surrounding area. The towns along Kootenay Lake have been struggling for years because of this; they struggle to attract top talent – or top tourists – because of the abysmal state of telecommunications in the area. Beyond economic concerns, the KiN project promises to prove transformative...

Quaddra provides Storage Insight

posted by Aaron Milne

Knowledge is power, and Quaddra Software aims to empower customers by giving them knowledge of their unstructured storage. Quaddra was founded by storage and computer science experts Rupak Majumdar, Jeffrey Fischer, and John Howarth, and their first product, Storage Insight, breaks new ground in the storage management and analytics market. So what is Storage Insight and how does it differ from other market offerings? First and foremost, Storage Insight isn’t a cloud storage solution or a cloud storage gateway. It is high-performance, highly scalable file analytics software designed to give storage and systems administrators the information they need to manage unstructured data files. As you can see from figure 1 above, by providing your teams with the information they need, Storage Insight can reduce the time and money involved in making decisions about your storage assets. One of the ways Storage Insight does this is by providing a clear and concise breakdown of the files in your existing unstructured storage. Storage Insight can help your team clear cold files off your expensive ‘hot’ storage. The category and filetype breakdowns also help you quickly and easily identify inappropriate use of storage assets. So how does Storage Insight work? Unlike traditional storage management, analytics and archival products, Storage Insight doesn’t need to ingest your data to work with it. Instead, it comes as a VMware + Ubuntu virtual appliance that integrates into your current environment. Storage Insight has pre-built modules that allow it to work with standard file storage architectures like NFS and CIFS, and can take plug-in modules for other types of storage that don’t use one of the standard architectures. Even better, Storage Insight doesn’t just integrate with physical, hardware-based storage. In a first for storage management and...
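The idea of analysing storage without ingesting it can be illustrated with file metadata alone. The sketch below is not Quaddra’s implementation; it simply walks a directory tree, totals bytes per file extension, and flags files as cold when their last-modified time is older than a threshold. The 180-day default is an arbitrary assumption.

```python
import os
import time
from collections import Counter
from pathlib import Path


def scan(root: str, cold_after_days: int = 180) -> tuple[Counter, list[Path]]:
    """Summarise a file tree from metadata alone (os.stat); contents are never read.

    Returns bytes per file extension, plus the files whose last-modified
    time is older than cold_after_days.
    """
    cutoff = time.time() - cold_after_days * 86400
    bytes_by_ext: Counter = Counter()
    cold: list[Path] = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                st = path.stat()
            except OSError:
                continue  # vanished or unreadable file: skip it rather than abort
            ext = path.suffix.lower() or "<none>"
            bytes_by_ext[ext] += st.st_size
            # mtime rather than atime, since many filesystems are mounted noatime/relatime.
            if st.st_mtime < cutoff:
                cold.append(path)
    return bytes_by_ext, cold
```

Because only `stat` is called, the cost of a scan grows with the number of files rather than the volume of data, which is what makes a metadata-first approach attractive for large unstructured shares.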

It’s time to stop tech industry FUD

posted by Trevor Pott

In 1970 Gene Amdahl left IBM to found his own company, Amdahl Corp. IBM waged a vicious marketing campaign against Amdahl that ultimately led Amdahl, in 1975, to define FUD as it is used in its modern context: “FUD is the fear, uncertainty, and doubt that IBM sales people instill in the minds of potential customers who might be considering Amdahl products.” The technology industry took the basic concept of argumentum in terrorem and refined it into its purest essence. Fear, Uncertainty and Doubt is the carefully calculated and malicious practice of using misinformation to generate doubt, and ultimately fear, in order to sell a product. FUD can be used at the level of an individual product or a feature, or wielded as a blunt cudgel against an entire company. It is used by individuals in social media interactions with thought influencers. It is used when talking to the media. Most heinously, it is used by sales people as part of high-pressure sales tactics when selling to the end user. Often, a technical document will need to exist that says “here are things we feel existing solutions do wrong, and so our altered approach does them this way instead.” This is, however, worlds apart from integrating negative selling, innuendo and outright attacks directly into sales and marketing from the outset. The line between fact and FUD can be blurry. If someone asks you for your honest opinion on a competitor, and for facts to back it up, responding honestly isn’t spreading FUD. It’s answering a direct question honestly. What sets the honourable salesman apart is that they do not seek to instill fear, uncertainty or doubt, or incorporate it as part of their regular sales pitch. This is what the clean fight...

It’s time to have “the talk” about wireless

posted by Trevor Pott

Everyone gather ’round, it’s time to have “the talk” about wireless. I don’t mean the birds and the bees, even though wireless standards do seem to reproduce at alarming rates. No, what needs discussion is the part where wireless throughput claims are an obvious pack of lies. More importantly, how does this marketing malarkey affect the real-world business of supporting users in an increasingly mobile world? Let’s start off with a VMworld anecdote. While anecdotal evidence isn’t worth much, this one illustrates the theory behind how wireless networks actually work, so it’s worth bringing into the discussion. VMworld 2014 kicked off on Sunday, August 24th. Despite this, the wireless network outside the main “Solutions Exchange” convention hall was up and running the Friday before. On Friday, with only a handful of nerds clustered outside the Solutions Exchange, it was entirely possible to pull down 4MiB/sec worth of traffic from the internet. Some things were clearly being traffic managed, as wrapping the traffic in a VPN tunnel could increase throughput by up to 10x. Saturday was more crowded. 100 nerds became 1000, and 4MiB/sec became a fairly consistent 500KiB/sec. That is still entirely usable for web browsing, but pulling down 100GB VMDKs from my FTP server back home suddenly got a lot harder. By early Sunday one critical element had changed: where Friday and Saturday saw maybe 3 different access points scannable from outside the Solutions Exchange, suddenly there were over 100, almost all of them MiFi hotspots or smartphones with their hotspot feature enabled. Sunday morning still saw only about 1000 devices vying for access outside the Solutions Exchange, but all these additional MiFi points had turned the 2.4GHz spectrum into a completely useless soup. A consistent 500 KiB/sec became an erratic, spiky mess...
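To put the Friday and Saturday numbers in perspective, here is a quick back-of-the-envelope calculation of how long one 100GB VMDK takes at each observed rate. It assumes the 100GB is 100 GiB and that the rate is sustained for the whole transfer, which real conference Wi-Fi rarely manages.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB


def transfer_hours(size_bytes: int, rate_bytes_per_sec: float) -> float:
    """Time in hours to move size_bytes at a constant rate."""
    return size_bytes / rate_bytes_per_sec / 3600


vmdk = 100 * GIB  # assumption: the 100GB VMDK is treated as 100 GiB
friday = transfer_hours(vmdk, 4 * MIB)      # ~4 MiB/sec with a near-empty hall
saturday = transfer_hours(vmdk, 500 * KIB)  # ~500 KiB/sec with ~1000 users
print(f"Friday: {friday:.1f} h, Saturday: {saturday:.1f} h")
# prints "Friday: 7.1 h, Saturday: 58.3 h"
```

The roughly 8x drop (4 MiB/sec divided by 500 KiB/sec is 8.192) turns an overnight download into one that outlasts the weekend, and that is before Sunday’s hotspot interference made the rate erratic.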

Storage wars: leveling the playing field.

posted by Trevor Pott

The storage industry is going through its first truly major upheaval since the introduction of centralised storage. Enterprises have years – and millions of dollars – worth of investment in existing fibre channel infrastructure, most of which is underutilised. Novel storage paradigms are being introduced into markets of all sizes. The storage industry is in flux, and buying a little time to correctly pick winners could save enterprises millions. Information technology is always changing. Calling any influx of novelty a “major upheaval” is easy to dismiss as overstatement or hype. Deduplication and compression were reasonably big deals that came out long after centralised storage, so what makes the current brouhaha so special? The difference is one of “product” versus “feature”. Deduplication and compression were never going to be products in and of themselves for particularly long; they were always destined to evolve into features that everyone offered. Today’s storage shakeup is different. Server SANs can do away with the need for centralised storage altogether, threatening to turn enterprise-class storage itself into a feature, not a product. Host-based caching companies are emerging with offerings that range from creating an entirely new, additional layer of storage in your datacenter to injecting themselves into your existing storage fabric without disrupting your network design. We’re at about the halfway point in the storage wars now; the big “new ideas” have all been run up the flag pole and there are a dozen startups fighting the majors to be the best in each of the various idea categories. The “hearts and minds” portion of the war is well underway, and that gives us a few years until there’s some major shake-up or consolidation. The big fish will eat the small fish. Some companies will rise, others...

Micron demos all-flash VSAN

posted by Phoummala Schmitt

For their VMworld demo, Micron is taking VSAN to a whole new level by creating an all-flash VSAN. The aim is to demonstrate “best in class” performance for a VSAN configuration. By eliminating storage bottlenecks on the datastore with 100% solid state storage and the latest high-speed network interconnects, Micron is aiming for performance that pushes the limits of storage. Micron’s all-flash VSAN configuration is a 6-node cluster of Dell R610s, each node with dual 2.7GHz 12-core Xeon v2 CPUs, 10 x 960GB Micron M500 SSDs for data storage, 2 x 1.4TB HHHL Micron P420m PCIe SSDs for the VSAN cache, and 768GB RAM, configured into 2 disk groups. Each disk group consists of 1 x P420m and 5 x M500 drives. The M500 SSDs are rated at up to 80,000/80,000 random read/write IOPS each. The PCIe P420m is rated at 750,000 read IOPS and 95,000 write IOPS. With these kinds of statistics, expect to see some great throughput. The end result is 9.4TB of storage space and 1.9TB of read cache per host, a cache-to-data ratio of roughly 1:5. (The remaining cache space is used for the write buffer, which is 840GB for each host.) The VMworld demo has Active Directory and VMs running various applications – including high-IOPS-consuming Microsoft SQL Server and backups using Veeam. The VDI deployment on the VSAN showcases what kind of performance can be achieved when storage bottlenecks are removed. The all-flash VSAN is not a fully supported configuration at the moment; however, when VMware does support it, Micron will be ready. If you are at VMworld San Francisco, stop by the Micron booth to see the demo and what kind of high performance VSAN you can...
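The cache figures can be reproduced from the per-host drive counts. This sketch assumes VSAN’s hybrid default of reserving 70% of each host’s flash cache for reads and 30% for the write buffer; that split is an assumption on our part, but it matches the 840GB write buffer quoted above. Raw data capacity works out to 9,600GB, slightly above the quoted 9.4TB, presumably because of formatting or reporting overhead.

```python
# Per-host figures from the Micron demo description.
DATA_SSDS = 10
DATA_SSD_GB = 960      # Micron M500
CACHE_SSDS = 2
CACHE_SSD_GB = 1400    # Micron P420m

# Assumption: hybrid VSAN default of 70% read cache / 30% write buffer.
WRITE_BUFFER_PCT = 30

cache_gb = CACHE_SSDS * CACHE_SSD_GB                   # 2800 GB of flash cache
write_buffer_gb = cache_gb * WRITE_BUFFER_PCT // 100   # 840 GB
read_cache_gb = cache_gb - write_buffer_gb             # 1960 GB
data_gb = DATA_SSDS * DATA_SSD_GB                      # 9600 GB raw

print(f"write buffer {write_buffer_gb} GB, read cache {read_cache_gb} GB")
print(f"read cache : data = 1 : {data_gb / read_cache_gb:.1f}")
# prints "write buffer 840 GB, read cache 1960 GB"
# then "read cache : data = 1 : 4.9"
```

Rounded, 1:4.9 is the 1:5 ratio described in the post.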

Is Now A Good Time To Replace CIRA?

posted by Josh Folland

EasyDNS CEO Mark Jeftovic recently sent a letter to the Canadian Minister of Industry regarding the Canadian Internet Registration Authority (CIRA)’s new positioning to enter the managed DNS space. “They have obtained an outside legal opinion to discern whether CIRA could be construed in violation of the Competition Act with respect to this proposed expansion of their core mission. I imagine the concern is that since CIRA is, as we all know, a monopoly with respect to the registration of .CA domain names, and that managed DNS services are something often provided by domain registrars, there may be a sentiment that CIRA could be abusing their monopoly position by going in and competing against their own registrars’ business interests: ‘with respect ... to the contemplated Managed DNS Service, as well as the application of the Act to CIRA, in general. External counsel was of the opinion that CIRA’s risk under the Act with respect to the offering of the Managed DNS Service was low. CIRA’s own assessment of the potential risk of claims under the Act for non-compliance was overall low’” Based on this knowledge, which Mark acquired via minutes of board meetings published on the CIRA website, he proposes that now is a good time for Industry Canada to re-examine the role of CIRA. His goal is not to inspire new legislation that protects companies like EasyDNS from CIRA’s potential move, but merely to open operation of the registry to true competition. Because of CIRA’s extant monopoly on .ca domain registrations, it would be unfair for it to compete against businesses that have to justify their existence to the market every day. “Thus we are suggesting that if CIRA has “grown up” and is ready to venture forth in the world and compete against private businesses, then the...

Unsatisfactory Responses

posted by Aaron Milne

Sysadmin Day is coming (much like winter). Be nice to your IT staff. Plan a party, eat some cake, etc. For those of you deep in the trenches, finish early and go home to see your loved ones. I’ve worked with SMEs for a long time now. My friends know this, and so occasionally one of them gets in contact with me to ask a question. This happened on the weekend and I thought I’d take the time to share my thoughts publicly on what I was asked. First of all, I’d like to apologise because this really isn’t a nice post. There’s no easy way to deal with the situation that this friend finds himself in. My friend has asked for anonymity and I’ve provided it; I’ll only be referring to him as Frustrated to protect him from any possible backlash, and I won’t name his employer either. Here is his e-mail to me:

Aaron, I changed jobs late last year just like you did. The wife and I wanted a sea change, so I’ve gone from working with a large enterprise to providing support for a number of SMEs. It’s hugely different and a big challenge, but I’ve been finding it worthwhile nonetheless. As you know, every year in October the wife and I always take a holiday. It’s tradition. I proposed to her on this holiday (redacted) years ago so it means a lot to us. This year I’m in a bit of a bind. About a month ago, one of the guys quit and they haven’t found someone to replace him yet. I’ve been doing his job and mine for the last month and the hours are starting to mount. [Redacted] was supportive at first; however, she’s starting to get upset at the long hours...

TechEd North America – The Closing Line

posted by Adam Fowler

TechEd North America 2014 is now over. You can read about the first two days of my experience here. Unsurprisingly, the second half wasn’t too different from the first, and there wasn’t a huge amount of excitement in the air. Wednesday morning started off slowly. There were a LOT of vendor parties on the Tuesday night beforehand, so maybe it was a difficult morning for many attendees. Once breakfast was over there wasn’t much to do: there were breakout sessions to attend (where you go into a room and listen to a presentation – one of the biggest parts of TechEd), but the Expo hall (where all the vendor booths are) didn’t open until 11am. I found it difficult to push myself to attend the breakout sessions because they were all available the next day for free via Microsoft’s Channel 9 service. It’s a great idea from Microsoft, but many attendees I spoke to shared my lack of enthusiasm for attending the sessions in person, saying they could watch them online later. There were some highlight sessions, though. Anything with Mark Russinovich (creator of Sysinternals) was highly talked about, and I attended “Case of the Unexplained: Troubleshooting with Mark Russinovich”, which was really interesting to watch. For lunch, I caught up with Nutanix to have a look at their offering. They treated me in style, giving me a Texas-style hat and using someone else’s leg power to get me there and back. I learnt that Nutanix offers a well-priced server-based solution that’s halfway between a single rackmount server and a full chassis/blade setup, and that also uses shared storage between the nodes (i.e. blade servers). I’ll definitely be looking into that further, both from a writing view as well as investigating for...

TechEd North America – Half Way Mark

posted by Adam Fowler

It’s now Wednesday 14th May, and we’re at the halfway mark of TechEd North America 2014. This is my first TechEd outside of Australia, and it’s been an interesting experience. A lot of the following reflections are shaped by my TechEd Australia exposure, which gave me certain expectations. For starters, the community is a really great group. Almost everyone is very courteous and respectful, which is inviting and welcoming to someone who’s traveled here by themselves. It’s very easy to just start talking to someone, as everyone seems genuinely interested in finding out more about others and having a chat. For example, as I was sitting writing this, someone mentioned that I should eat something as I hadn’t really eaten much. We had a quick chat about jetlag, and I thanked him for his concern. I’ve been told it’s a sold-out event, with about 11,000 people in attendance, which dwarfs Australia’s 2000-3000 headcount. The venue itself, the Houston Convention Center, is huge, along with all the areas inside. The general dining area looks bigger than a soccer field to me. The Expo area is about as big, and contains all the vendors giving away shirts, pens and strange plastic items while trying to convince you to learn more about their products. The staff are quite nice too, and not too pushy. There’s also a professional yo-yo player, a magician and probably other novelties that I’ve not seen yet. Many competitions are going on with the vendors too. One offered a chance to go bowling with Steve Wozniak, and I got to stand next to him, which was awesome. Sadly, I didn’t win the bowling part, though. There’s a motorbike to win, countless Microsoft Surfaces, headphones and other bits and pieces that vendors...

TechEd North America keynote liveblog

posted by Trevor Pott

Adam Fowler has flown from Adelaide, Australia to Houston, USA in order to bring you the up-to-the-minute information from the TechEd North America keynote. Join us for the latest Microsoft news as it...

Windows Phone 8.1 Is Out!

posted by Adam Fowler

The Windows Phone 8.1 OS update is now available to ‘Developers’. In this case, a developer is anyone who downloads and briefly sets up the Preview for Developers app and then runs a phone update. This is actually a decent way to let people who are happy to play with an update do so before it is released to the general public. The upgrade process isn’t very exciting, but can take a while. On my Nokia Lumia 1020, there was a small 2MB update which took about 10 minutes to install, followed by the big 8.1 update (of unknown size), which took about 25 minutes. It’s a very smooth process, but don’t do this when you’re expecting a call. After upgrading I had a headache, but that was unrelated. Overcoming this, I was looking at a phone with many obvious improvements. 8.1 is a BIG update, and here are some of the more interesting bits I found:

Screenshots – The button combination used to be Power and Start, but it’s now Power and Volume Up. They nicely tell you this when you try to take your first screenshot (say, if you were writing an article).

Notification Center – Yes, Microsoft has followed Apple, who followed Google. I see this as a standard requirement for a smartphone now, and this notification screen gives you enough information. Yes, mine’s a bit blank, but it shows you unread emails, SMSes and so on. It can also take you to the settings page, which for me means one less tile on the Start screen. Another handy part of the Notification Center is the ability to turn screen rotation on and off. Now you can control it once you’re in an app, as I usually have it off for lying in bed where gravity...

Windows 8 – Folder In Use

posted by Adam Fowler

Originally posted at adamfowlerit.com

Hi,

Since using Windows 8, I’ve had continual issues when trying to move folders around. It’s the normal message saying “Folder In Use – The action can’t be completed because the folder or a file in it is open in another program”. I’d get this continually, even though I was quite confident that I didn’t have anything actually open. Eventually I’d try again, and after a few tries it would finally move my folder. I was fed up with this, so I thought it was about time to work out what was going on. Someone (thanks Barb) reminded me that Process Explorer was a good way to work out what file was open. I ran Process Explorer, moved a few folders until I recreated the error, and did a search for the folder that was in use. I found that explorer.exe had the thumbs.db file open, even though I hadn’t navigated inside the folder. Thumbs.db? It’s been around for ages (since Windows 95!) and is a thumbnail cache file used for Windows Explorer’s thumbnail view. It was deprecated in Windows Vista and later, replaced by a centralised thumbnail location instead of Thumbs.db files dropped all over your hard drive. The problem is, Vista and above still create the old Thumbs.db by default! Windows 8 seems to be even worse, in that it still creates the file but then keeps it open for a rather long time. After finding this thread on TechNet, where I learnt a lot of the above, I enabled the setting “Turn off the caching of thumbnails in hidden thumbs.db files” under User Configuration > Administrative Templates > Windows Components > File Explorer. After doing this and then rebooting, my “Folder In Use” issue seems to have completely cleared. I can understand...
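If you want to see how many of these files are scattered around before and after enabling the policy, a short scan will list them. This is a minimal sketch, not part of the original fix: it matches the name case-insensitively because Windows treats `Thumbs.db` and `thumbs.db` as the same file, and the example path in the usage note is hypothetical.

```python
import os
from pathlib import Path


def find_thumbs_db(root: str) -> list[Path]:
    """Return every Thumbs.db file under root, matching the name case-insensitively."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.lower() == "thumbs.db":
                hits.append(Path(dirpath) / name)
    return hits
```

For example, `for p in find_thumbs_db(r"D:\Projects"): print(p)`. Note that the policy stops new files being created; existing copies stay behind, and any that explorer.exe currently holds open will still block a move until they are released.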

LinkedIn Security/Information Risks with Exchange

posted by Adam Fowler

Originally posted on adamfowlerit.com. Today after logging on to LinkedIn, I was greeted with a new screen I found rather worrying. It is commonplace for services like LinkedIn and Facebook to scan through your address book, and ask for credentials to do so (which is rather concerning already), but a new option has popped up that asks for your work username and password. No 3rd party should be asking for corporate credentials like this, least of all a company that’s been hacked before (http://www.pcworld.com/article/257045/6_5m_linkedin_passwords_posted_online_after_apparent_hack.html). I tried this with a test account, entering the username and temporary password. It then asked for one more piece of information, the address of the Outlook webmail link, and then connected and started showing contacts. On this page LinkedIn says “We’ll import your address book to suggest connections and help you manage your contacts. And we won’t store your password or email anyone without your permission.” That’s a start, but it’s such a bad practice to get into, and encouraging people to do this is irresponsible of LinkedIn in my opinion. On top of this, it provides an easy mechanism for staff to mass-extract their contacts outside the company, which many companies frown upon or even have strict policies against. You can’t stop people from entering these details, of course, but you can block the connection from working at the Exchange end, as long as you have at least Exchange 2010 SP1. There are a few settings to check. First, under Set-OrganizationConfig, you’ll need to check that EwsApplicationAccessPolicy is set to ‘EnforceBlockList’. If it’s not, it’s going to be ‘EnforceAllowList’ and you’re probably OK, as it’s using a whitelist that allows access to only what’s listed rather than a blacklist, to only block...
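A sketch of that check, run from the Exchange Management Shell on Exchange 2010 SP1 or later. The `LinkedInEWS*` user-agent pattern is an assumption, not something taken from this post; confirm the string LinkedIn actually sends by looking at your EWS/IIS logs before adding it to the block list.

```powershell
# Run from the Exchange Management Shell (Exchange 2010 SP1 or later).
# Show the organization-wide EWS access settings discussed above.
Get-OrganizationConfig | Format-List EwsApplicationAccessPolicy, EwsAllowList, EwsBlockList

# Hypothetical user-agent pattern: confirm it from your own logs first.
$pattern = 'LinkedInEWS*'

$org = Get-OrganizationConfig
if ($org.EwsApplicationAccessPolicy -eq 'EnforceAllowList') {
    # An allow list already blocks any client that isn't explicitly listed.
    Write-Output 'EnforceAllowList in use: LinkedIn is blocked unless it is on the allow list.'
} else {
    # Use (or keep) a block list and add the pattern to it without
    # replacing any existing entries.
    Set-OrganizationConfig -EwsApplicationAccessPolicy EnforceBlockList -EwsBlockList @{Add = $pattern}
}
```

The `@{Add = ...}` form appends to the multi-valued list rather than overwriting whatever is already blocked.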

Disabling the Windows Update Framework

posted by Aaron Milne

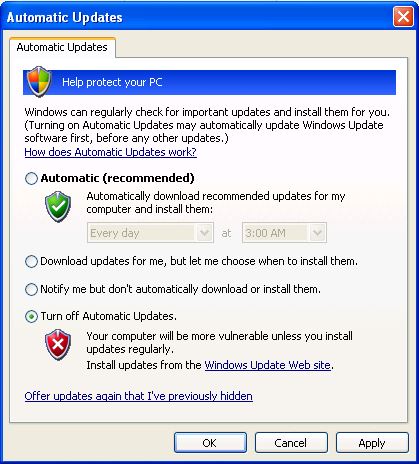

Over the coming week(s) I may (time permitting) post some how-tos on the ways I’ve gone about securing the systems of several clients that are still forced to stay on XP for various reasons. First things first. If you aren’t already using it, do yourself a favour and grab WSUS Offline 9.1 from here. It’s important that you don’t grab anything newer than 9.1, as this is the last version of the tool that will support both Windows XP and Office 2003. Once you’ve got it, you should go and make yourself a gold master copy of the Windows XP updates. This means that should the worst happen and you have to reinstall from a disc or barebones VM, you won’t have to rely on the shitty Windows Update website/service. For bonus points, once you’ve installed all the remaining updates for Windows XP and Office 2003, go right ahead and disable the forsaken piece of dreck that is Windows Update in XP. Not only will you make it more difficult for any malware that requires the service to get a toehold in your system, but you should also get a tidy little speed boost, as Windows won’t try to load it during boot and won’t randomly check for updates. Just in case you aren’t sure how to go about disabling Windows Update in XP, I’ve produced a handy little guide to help you do just that… How to disable Windows Update in Windows XP in five easy steps: Disabling Windows Update in Windows XP is actually quite easy. NB. Make sure you have all available updates for Windows XP and (if you are still using it) Office 2003. Please also ensure that you are NOT relying on Microsoft Security Essentials as your...
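For those who would rather script it, here is a sketch that disables the same service. It is not the five-step guide itself (that is cut off here), and it assumes Windows PowerShell 2.0 has been installed on the XP machine, since XP doesn’t ship with it, and that you are running as an administrator. `wuauserv` is the service behind XP’s Automatic Updates.

```powershell
# Assumes Windows PowerShell 2.0 is installed on XP and the session is elevated.
# 'wuauserv' is the Automatic Updates (Windows Update) service.
$serviceName = 'wuauserv'

# Stop the service if it is currently running.
$service = Get-Service -Name $serviceName
if ($service.Status -eq 'Running') {
    Stop-Service -Name $serviceName -Force
}

# Stop it from starting at boot.
Set-Service -Name $serviceName -StartupType Disabled

# PowerShell 2.0's Get-Service doesn't show the startup type, so ask WMI instead.
Get-WmiObject -Class Win32_Service -Filter "Name='wuauserv'" |
    Select-Object Name, State, StartMode
```

Only do this after the remaining updates are installed; with the service disabled, nothing further will arrive through Windows Update.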

How To Change IE10’s Default Search Engine

posted by Adam Fowler

Originally posted on adamfowlerit.com. Automating the change of Internet Explorer 10’s default search engine from Bing to Google shouldn’t be a difficult task, but it is. I’ll first cover what we’re trying to automate, then the possible options for doing it. I was surprised by how much misinformation I found online while working on this, so I’ll add notes about that along the way. Brief instructions are at the bottom if you just want to know what to do! To do this manually on an individual PC, you need to do two things: install the Search Provider add-on, then set it as the default. The first part can be done by going to the iegallery website and finding an add-on; for Google Search you can go here: http://www.iegallery.com/en-us/Addons/Details/813 and click the big ‘Add to Internet Explorer’ button. You can set it as the default from the popup that appears when you click the button, or by going into your Add-ons and ticking the right search engine as your default. Google provides some very basic instructions here https://support.google.com/websearch/answer/464?hl=en which are, for Internet Explorer 10:
1. Click the Gear icon in the top right corner of the browser window.
2. Select Manage add-ons.
3. Select Search Providers.
4. In the bottom left corner of your screen, click Find more search providers.
5. Select Google.
6. Click the Add to Internet Explorer button.
7. When the window appears, check the box next to Make this my default search provider.
8. Click Add.
So far this is incredibly simple! If you were starting from scratch, you could package up IE10 using the Internet Explorer Administration Kit (IEAK) and add in extra search engines as well as specify the default. There’s a good guide at 4sysops here http://4sysops.com/archives/internet-explorer-10-administration-part-4-ieak-10/ which covers this, but it doesn’t help you if PCs already have IE10, or will get it via other means (e.g....

Media Player Quest

posted by Adam Fowler

For the last several years, I’ve been on a quest. A quest that has finally been completed. I can’t remember exactly when it first started, but I remember a happy time. I owned a modded Xbox (the original!) and it had a media player installed on it. It was called XBMC, which aptly stood for XBox Media Center. It was an absolute delight to use. My gaming machine became my lounge room media player. It connected to my TV via S-Video, as that was slightly higher quality than composite video, and it had a 100Mbit Ethernet port so I could stream media from a PC in another room. It supported SMB file shares, which meant no client was required on my Microsoft Windows PC; it just needed credentials to navigate through folders and play the videos I wanted. The navigation of the software itself was quick and smooth. I could quickly jump to any point in a video, or fast forward and rewind with ease. I could even easily adjust the audio/video sync if my source was out of sync. There was even an official Xbox Remote and IR Sensor that worked brilliantly with the setup, so no death trap cable was running across the living room (unlike the network cable, but that’s another story). This delightful time eventually ended. Higher resolution TVs came out with their fancy new standard connection – HDMI. The Xbox was cast aside as a full tower PC took its place. Windows Explorer along with a keyboard and mouse was the easiest thing to use to navigate and play files. A VGA cable simply connected the PC to the new TV and supported 1920 x 1080. Sure, lots of the media I actually watched was still nowhere near that...

Windows One to offer hope to XP holdouts

posted by Trevor Pott

Microsoft has performed its most amazing U-turn yet. Today’s turnaround will see the creation of the Windows One brand: a cloudy subscription entitling the user to perpetual support for any version of Windows from Windows XP onward, so long as the bill is paid, of course. Relenting under what is being described as “unremitting pressure” from customers and governments alike, Microsoft has committed to making this unprecedented licensing change within the next week. At $65 US per system per year, the subscription costs roughly the same as a retail copy of Windows Professional spread out over three years. The move is part of a series of “rapid culture changes” initiated by new CEO Satya Nadella. After careful review of Microsoft’s competitive position and long-term strategy, Nadella has decided that the issue Microsoft most urgently needs to address is the evaporation of customer trust. That trust evaporated under his predecessor Steve Ballmer’s tenure, and Nadella has had no success so far in rebuilding it. Microsoft does not hold a monopoly in many of the markets it is seeking to dominate. It faces powerful competitors for its server technologies, and ranges from a virtually irrelevant also-ran in the mobile space to a distant second in the cloud space. “Microsoft is changing gears and re-focusing on customer choice,” said Nadella. He continued: “the Windows One subscription is an olive branch to existing Windows XP customers”, allowing them to stick with the aging operating system for as long as they like. Nadella hopes that this “olive branch” can convince customers that Microsoft has changed under his leadership. He is determined to show that Microsoft is aiming to strengthen bonds with partners, developers and customers. It remains to be seen if this...

Unable to Map Drives from Windows 8 and Server 2012

posted by Adam Fowler

Originally posted at adamfowlerit.com. I came across this issue recently and thought it was worth sharing. Mapping drives from a Windows 8 machine to either Windows Server 2003 or Windows Server 2008 was failing. The error was just the generic “Windows cannot access *blah*”, but the details showed “System error 2148073478”. Some googling found this Microsoft Support article: http://support.microsoft.com/kb/2686098 Oddly, it only talks about 3rd party SMB v2 file servers, but applying its client fix solved the problem on an individual basis: Disable “Secure Negotiate” on the client. You can do this using PowerShell on a Windows Server 2012 or Windows 8 client, using the command:
Set-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Services\LanmanWorkstation\Parameters" -Name RequireSecureNegotiate -Value 0 -Force
Note: If you get a long access denied error, try running Windows PowerShell as an Administrator. This fixes it, but it’s not ideal. A better solution may be to disable SMB signing on the particular server you’re connecting to. The next set of instructions are from Exinda: http://support.exinda.com/topic/how-to-disable-smb-signing-on-windows-servers-to-improve-smb-performance To disable SMB signing on Windows Server 2000 and 2003, perform the following:
1. Start the Registry Editor (regedit.exe).
2. Move to HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services\LanManServer\Parameters.
3. From the Edit menu select New – DWORD value.
4. Add the following two values, EnableSecuritySignature and RequireSecuritySignature, if they do not exist. Set each to 0 to disable (the default) or 1 to enable. Enabling EnableSecuritySignature means that if the client also has SMB signing enabled, signing is the preferred communication method; enabling RequireSecuritySignature means SMB signing MUST be used, so if the client does not have SMB signing enabled, communication will fail.
5. Close the registry editor.
6. Shut down and restart the server.
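The registry steps above can also be scripted. This is a sketch rather than Exinda’s procedure, and it assumes the file server has Windows PowerShell available (an optional install on Server 2003) and that the session is elevated. It writes both values as 0, i.e. signing disabled, and a restart is still needed afterwards.

```powershell
# Run elevated on the file server being connected TO, not on the Windows 8 client.
$params = 'HKLM:\SYSTEM\CurrentControlSet\Services\LanManServer\Parameters'

# 0 = disabled, 1 = enabled, as described in the steps above.
foreach ($name in 'EnableSecuritySignature', 'RequireSecuritySignature') {
    # -Force creates the DWORD if missing, or overwrites it if it already exists.
    New-ItemProperty -Path $params -Name $name -PropertyType DWord -Value 0 -Force | Out-Null
}

# Show the values that will apply after the restart.
Get-ItemProperty -Path $params | Select-Object EnableSecuritySignature, RequireSecuritySignature

# The change takes effect after a restart. Uncomment when ready:
# Restart-Computer
```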
In addition, default Domain Controller Security Policies may also force these values to “enabled” on Windows Servers. On Windows 2003 Servers, open Domain Controller Security...

Troubleshooting NIC Drivers in WinPE for SCCM 2012

posted by Adam Fowler

Originally posted at adamfowlerit.com. This is one of the problems that every SCCM (System Center Configuration Manager) admin will come across. You’re trying to deploy an image to a PC from PXE booting, and you can’t get the list of task sequences to show up. The PC will reboot, and you’ll wonder what happened. There are several different ways to troubleshoot this, but the most likely cause is missing network card drivers in your Boot Image in SCCM. Where do you start on this though? There are a couple of things to enable/set to make it a little easier. First, enable command support on both your x86 and x64 boot images (Software Library > Overview > Operating Systems > Boot Images). This will allow you to press F8 when running WinPE from a task sequence, which brings up a command prompt to let you check things like log files. The other setting I recommend is making custom Windows PE backgrounds (same screen as the command support option). Have one for your 32 bit Boot Image, and a different one for your 64 bit one. This means when something fails, you can tell at a glance which boot image was used and troubleshoot accordingly. Back to working out your NIC issue. If the task sequence is bombing out early on, press F8 to get your command prompt, then run the command ‘ipconfig’. If you see hardly any information, including the lack of an IP address, then it’s a strong indicator that the correct NIC driver isn’t loaded. I’m going to guess you’ve checked the network cable is plugged in 🙂 Working out which NIC driver is required can be tricky. If your hardware came with an OS already loaded, or a recovery disk, you can load that up and from...
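If the machine will boot into a full Windows install (such as the OS it shipped with), one way to identify the NIC is to read its PnP hardware ID; the `VEN_xxxx&DEV_xxxx` part can then be matched against the INF files of the drivers you import into SCCM. A minimal sketch, assuming Windows PowerShell is available on that install; the `PhysicalAdapter` property needs Vista or later.

```powershell
# List physical network adapters with their PnP device IDs.
# The PCI\VEN_xxxx&DEV_xxxx portion identifies the chipset the driver must support.
Get-WmiObject -Class Win32_NetworkAdapter -Filter 'PhysicalAdapter = True' |
    Select-Object Name, Manufacturer, PNPDeviceID |
    Format-Table -AutoSize
```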

Practical Group Policy

posted by Adam Fowler

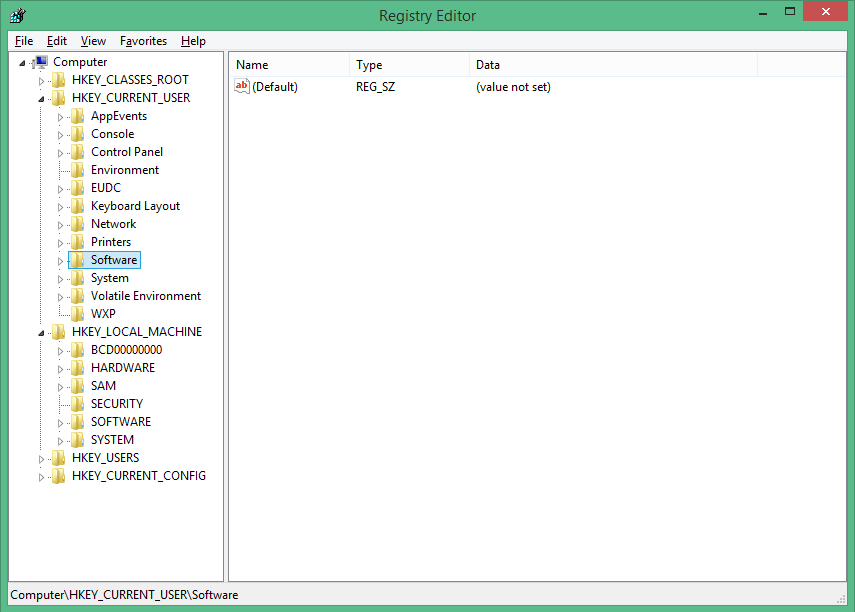

When you think of the Windows Registry, you’re normally concerned with two sections: HKEY_LOCAL_MACHINE (HKLM) and HKEY_CURRENT_USER (HKCU). It’s fairly obvious that settings under each area apply either to the PC itself (machine) or just to the currently logged in user. This is usually fine, but there are scenarios where a setting will only apply to the machine due to how the program is written, yet you actually want to turn it on or off based on the logged on user. With Group Policy Preferences (GPP), which was introduced with Windows Server 2008, this is much easier to do. Before this, you would have needed to write complex logon scripts using 3rd party tools to perform lookup commands, create variables and then adjust the registry accordingly, while providing administrator credentials. GPP lets you apply registry settings rather easily. One of the main benefits of GPP is how flexible and granular you can be with the settings you apply. This is how I would normally deploy a setting while keeping it easily manageable: Have two settings for the registry, one setting it on and the other off (normally a 1 for on and 0 for off, but it depends on the setting). The targeting for having the setting on or off is based on the user’s membership of an Active Directory (AD) group, but the setting is not applied in the user context, meaning it’s applied by ‘System’, which has full access to the HKLM registry. The HKLM setting then changes from 0 to 1 and back based on which user logs in! I prefer this to just applying particular users individually to the item because it will reduce processing time having a single check...
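To make the targeting logic concrete, here is roughly what the older logon-script approach had to do. This is an illustration, not how GPP works internally, and every name in it (group, key, value) is hypothetical. It also has to run with rights to write HKLM, which is exactly the problem GPP sidesteps by applying the item as System while still targeting on the logged-on user’s group.

```powershell
# Hypothetical names: substitute your own AD group, registry key and value.
$groupName = 'MYDOMAIN\FeatureX-Enabled'
$keyPath   = 'HKLM:\SOFTWARE\ExampleVendor\ExampleApp'
$valueName = 'FeatureX'

# Check group membership using the current user's logon token.
$identity  = [System.Security.Principal.WindowsIdentity]::GetCurrent()
$principal = New-Object System.Security.Principal.WindowsPrincipal($identity)

# 1 = on for group members, 0 = off for everyone else (the two GPP items).
if ($principal.IsInRole($groupName)) { $value = 1 } else { $value = 0 }

# Writing to HKLM needs administrative rights; GPP avoids this by applying as System.
if (-not (Test-Path -Path $keyPath)) {
    New-Item -Path $keyPath -Force | Out-Null
}
New-ItemProperty -Path $keyPath -Name $valueName -PropertyType DWord -Value $value -Force | Out-Null
```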

Getting AD User Data via PowerShell

posted by Adam Fowler

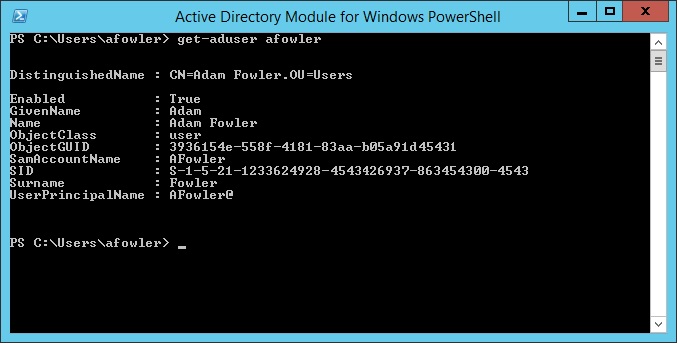

It’s a common question asked of IT – “Can you give me a list of who’s in Marketing?” or “How many accounts do we actually have?” Before PowerShell, this was a lot harder to do. There were companies like Quest Software who provided several handy tools (and still do), or long, complicated Visual Basic scripts. So, how do you get a list of users? All of this is being done from the Active Directory Module for Windows PowerShell, which is installed as part of the Windows Server 2012 feature Role Administration Tools > AD DS and AD LDS Tools > Active Directory Module for Windows PowerShell. The ‘Get-ADUser’ command is what we’ll use to demonstrate what you can do. For starters, ‘Get-ADUser -Filter *’ will get you a list of all users, output one after the other with a lot of information for each. You can specify a single user with:
Get-ADUser -Identity username
which will just show you the one result. As you may be aware, a user has a lot more fields than just the ones shown. You can tell PowerShell to show you all the properties by modifying the command like this:
Get-ADUser -Identity username -Properties *
Note that in PowerShell v4, if you get the error “get-aduser : One or more properties are invalid.” then there may be an issue with your schema. Check out this post for more information. If there’s just one extra property you need, there’s no point getting everything, so if you needed to see a field such as “Department” for all users, then adjust the command like this:
Get-ADUser -Filter * -Properties Department
Now, this gives the results for every single user in your Active Directory environment. You can narrow this down to a particular...
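Building on those commands, here is a sketch that answers the two questions from the start of the post. It assumes the Active Directory module is installed as described, and ‘Marketing’ is a placeholder: the match is on the Department attribute, so it only works if that field is filled in consistently (if “Marketing” is an AD group instead, you would query the group’s members).

```powershell
Import-Module ActiveDirectory

# "How many accounts do we actually have?" (all accounts, then enabled only)
$allUsers = Get-ADUser -Filter *
"Total user accounts: $(@($allUsers).Count)"
"Enabled user accounts: $(@(Get-ADUser -Filter 'Enabled -eq $true').Count)"

# "Can you give me a list of who's in Marketing?"
# 'Marketing' is a placeholder; use the Department value your directory actually holds.
$marketing = Get-ADUser -Filter "Department -eq 'Marketing'" -Properties Department |
    Select-Object Name, SamAccountName, Department |
    Sort-Object Name

$marketing

# Optionally hand the list over as a CSV (the file name is arbitrary).
$marketing | Export-Csv -Path .\marketing-users.csv -NoTypeInformation
```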

Diverting Unassigned Numbers in Lync 2010

posted by Adam Fowler

Lync 2010 with Enterprise Voice attaches a phone number to a user in a direct one-to-one relationship. This means that when a user departs, the account should get disabled along with any Lync attachments. In turn, this abandons the phone number that was attached to the user. All great by design, but what about external people who are still calling the number of the departed user? You could leave the user’s Active Directory account disabled, leave Lync attached and configure it that way, but that’s a big hassle. Microsoft covers this scenario with a feature called “Unassigned Number”. Unassigned Number lets you configure an Announcement on an entire number range. The idea is that if any extension in the configured number range (which can be a single number) is called and isn’t attached to a user, the call is diverted to either an Announcement or an Exchange UM Auto Attendant number. Generally just the Announcement is fine, because the Announcement can be configured to play nothing but divert the call to wherever you want it to go afterwards. The Announcement, which can only be managed through PowerShell, needs to be configured before you create an Unassigned Number Range. The Unassigned Number Range itself can be created via either the Lync Control Panel GUI or PowerShell, which seems a bit inconsistent. Creating an Announcement is still rather easy, with a great guide from TechNet here. The important bits are to give your Announcement a name, tell it which Lync Pool to use and give it a target SIP address to send the call to. An example command to do this is:
New-CsAnnouncement -Identity ApplicationServer:lyncpool.mydomain.com -Name "Forward Announcement" -TargetUri sip:reception@mydomain.com
Note that if you use a direct phone number for the sip address, you need to append...
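Once the Announcement exists, an Unassigned Number Range can point at it. A sketch from the Lync Server Management Shell, reusing the pool and announcement name from the example above; the range identity and the numbers are hypothetical placeholders for your own E.164 range.

```powershell
# Hypothetical range name and numbers: replace with your own DID range in E.164 format.
New-CsUnassignedNumber -Identity "DepartedStaff" `
    -NumberRangeStart "+15555550100" `
    -NumberRangeEnd "+15555550199" `
    -AnnouncementService ApplicationServer:lyncpool.mydomain.com `
    -AnnouncementName "Forward Announcement"

# Confirm the range was created and is pointing at the announcement.
Get-CsUnassignedNumber -Identity "DepartedStaff"
```

Because numbers still assigned to users take precedence, a range can safely cover a whole block of extensions; only the unassigned ones hit the announcement.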

How to opt out of Shared Endorsements

posted by Katherine Gorham

How do you feel about having your mom find out that you gave “Kinky Drinking Games: Spring break edition” a four-star review? Or about sharing your positive experience of hemorrhoid ointment with that client you’re trying to impress? What if your strongly pro-life supervisor at work sees your face in an ad for “Everything You Need to Know to Prepare for an Abortion?” Not keen? Google recently updated their Terms of Service to include something called Shared Endorsements, which will go live on November 11. If you have a Google Plus account, Shared Endorsements allow Google to use your name, image, and activity on Google sites to advertise to your family and friends. Google Plus users under eighteen years of age are automatically excluded from Shared Endorsements, but adults need to change their account settings if they don’t want their information used in ads. If you would like to opt out, here’s how:
1) Sign in to your Google Plus account.
2) From the “Home” drop-down menu in the top left corner, choose “Settings.”
3) Check to see if Shared Endorsements are on or off. If they are on, click “Edit.”
4) The Shared Endorsements page is long and the actual opt-out is below the fold. Scroll down until you reach the opt-out tick box.
5) Make sure the tick box is unchecked, then hit “Save.”
6) A warning message will pop up; hit “Continue.”
You’ve opted out. Go plus-one “Rabid Zombie Ferret Fanciers” in (relative)...