Computers are the most awful way to do things, except for all the other ways we’ve tried. It’s easy to blame computers; they don’t fight back. What’s much more difficult, yet distressingly important, is figuring out why computers have done something unwanted and remedying the situation. One important tool in a systems administrator’s arsenal is SolarWinds’ Virtualization Manager. Humans have a natural tendency to anthropomorphize inanimate objects. Many of us give our cars names, ascribe personalities to them, talk to them and sometimes treat them like members of the family. We similarly ascribe personalities and motivations to individual computers or even entire networks of them, often despite being perfectly aware of how irrational this is. We can’t help it: anthropomorphizing is part of being human. Computers, however, aren’t human. They don’t have motives and they don’t act without input. They do exactly what they are told, and that’s usually the problem. The people telling the computers what to do – be they end users or systems administrators – are fallible. The weakest link is always the one between keyboard and chair. The more stress we’re put under, the more likely we are to make an error. Whether due to unreasonable demands, impossible deadlines, or networks that have simply grown too large to keep all the moving parts in memory at any given time, we fallible humans need the right tools to do the job well. You wouldn’t ask a builder to build you a home using slivers of metal and a rock to hammer them in. So why is it that we so frequently expect systems administrators to maintain increasingly complex networks with the digital equivalent of two rocks to bash together? It’s a terrible prejudice that leads many organizations to digital ruin. A tragedy that, in...

Year end thank yous

posted by Trevor Pott

It’s that time of year again. The time of year where I thank vendors for sucking slightly less than their competitors over the previous 12 months. Some do this through product excellence, some through excellence in customer service. As a rule, I find vendors trying, and so I take an almost absurd amount of pleasure in reminiscing about those that, in one form or another, make the grade. To this end, I will start the year’s festivities with a joke about how to measure how “ready” a product is for general consumption.

Google ready: 4 years, 3 PhDs and an army of undergrads later, it runs in 30 datacenters on 5 continents, but only two people know how.
Hyperscale ready: with a trained $1M/yr specialist and a support team of 5, it will support 50,000 clients at 99.95% uptime.
Enterprise ready: with a team of 20 certified technicians, 4 middle managers and a vendor engineer, it will integrate with our mainframe.
SMB ready: one of the two burnt-out husks that were once people remembers enough about how it works to restart it.
Trevor Pott ready: issues reduced to one 4:00am phone call per annum.

So without further delay, here is my totally biased list of 2016’s kickass vendors that have made my own personal life easier:

Scale Computing

Scale Computing makes hyperconverged appliances: servers (3 or more) with built-in storage that work together to allow virtualized workloads to achieve high availability without needing a SAN. Scale’s solution is built on top of KVM and uses its own custom software for monitoring, self-healing, management and the user interface. Scale doesn’t expose nearly as many of the hypervisor’s features to the user as rivals like VMware. From one perspective, this means that Scale is nowhere near...

ioFABRIC Vicinity 1.7 Video Review

posted by Josh Folland

ioFABRIC provided us with access to their software-defined storage platform, Vicinity (version 1.7), and commissioned us to do a review. The short version? It’s good. VERY good. ioFABRIC Vicinity merges all of your storage together and presents it as a single storage fabric for your workloads to consume, while letting you configure optimizations that ensure the right workloads use the right storage – fast, high-IOPS workloads on fast, high-IOPS storage; slow, low-IOPS workloads on slow, low-IOPS storage. It deploys either onto bare metal or as a virtual appliance and can be installed and configured in minutes. In our review video, we explain how ioFABRIC Vicinity works, why it’s different from other software-defined storage platforms out there, and show how it’s transformed our data center. Check out the video below and be sure to visit ioFABRIC.com/deploy to try it out for...
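The idea of matching workloads to storage can be made concrete with a toy placement rule in Python. This is a hypothetical sketch, not how Vicinity actually decides placement. It assumes each storage pool advertises a sustainable IOPS figure and each workload declares the IOPS it needs; the pool names and numbers are invented. The rule picks the slowest pool that still meets the requirement.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Pool:
    name: str
    max_iops: int  # hypothetical: IOPS this pool can sustain


@dataclass
class Workload:
    name: str
    required_iops: int  # hypothetical: IOPS this workload needs


def place(workload: Workload, pools: List[Pool]) -> Optional[Pool]:
    """Hypothetical placement rule (not Vicinity's algorithm): choose the
    slowest pool that still meets the workload's IOPS requirement, keeping
    faster storage free for workloads that actually need it."""
    candidates = [p for p in pools if p.max_iops >= workload.required_iops]
    if not candidates:
        return None  # no pool is fast enough
    return min(candidates, key=lambda p: p.max_iops)


pools = [
    Pool("nvme-flash", 200_000),
    Pool("sata-ssd", 50_000),
    Pool("nl-sas-disk", 1_500),
]
print(place(Workload("oltp-db", 40_000), pools).name)
print(place(Workload("file-archive", 300), pools).name)
```

Picking the slowest sufficient pool, rather than the fastest one available, is one simple way to keep premium storage for the workloads that need it. A real product would also weigh capacity, latency and cost.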

Marvel vs Capcom 4 rumors gain momentum

posted by Josh Folland

The rumor mill has been running at full speed this week, churning out news about a potential announcement of a sequel to the Marvel vs Capcom series at the Capcom Cup finals this weekend during the PlayStation Experience event. Fans have been awaiting a sequel with bated breath since Ultimate Marvel vs. Capcom 3’s release in November of 2011, but had low expectations because Disney was making its own Marvel-themed video games for a few years – most of which were not met with high praise. Recently, Disney put the license for Marvel games back out into the world and abandoned its in-house game development projects, paving a path for Capcom to create another sequel. Let’s recap some of the news items that have popped up over the last few days. First, images surfaced showing a logo and some shots similar to the new cinematic opening Marvel has used for its logo in movies such as Doctor Strange, but with some of the iconic MvC characters in it. Until this point, rumors about Marvel vs Capcom 4 had been just that – rumors. There hadn’t been any content published anywhere other than some users on forums saying “I heard from a very reliable source that Marvel vs Capcom 4 is happening.” I’d even heard similar tales myself – at Evo 2016 this year (the Fighting Game Community (FGC)’s biggest and most prestigious tournament), mutterings of “Marvel vs Capcom 4 is happening according to my very reliable source” definitely made their way through the grapevine. With nothing more than words and no official source, I for one couldn’t report on it, nor could anyone else at the time. Between the buzz surrounding a potential announcement and the event coming up, the images really struck a chord with the...

Drones: shiny happy cloudy future

posted by WeBreakTech

In Breakroom, WeBreakTech staffers chat about the last couple of weeks in tech. What’s new? What’s broken? What are we working on? What makes us want to hurl things into traffic? Sarcasm, salty language, and strong opinions abound.

_____________________________________________________________________________________________

Josh.Folland: I propose we complain about the implications of drones this week. Drones with cameras, and advertising drones hassling drivers.

Trevor.Pott: I think you should start, Josh, as you are familiar with some of the regulations around this for personal and commercial use in Canada.

Josh.Folland: Re: the second article, where Uber has drones advertising. I had joked on social media that if this ever happened to me, I’d get a big fishing net or train up an eagle and get me a free drone. However, this won’t happen in Canada – at least not without companies like Uber throwing money at lobbying efforts to change the laws. Here, it’s illegal to fly a drone over a crowded area or even in the field behind your house without getting the proper permits from Transport Canada. Which means filling out like a 20-page proposal, from what I’ve seen.

Katherine.Gorham: I’m grateful for that. The idea of advertising drones makes me grumpy.

Josh.Folland: The law isn’t really going to care if you fly a drone in the field by your house, but your neighbors might. The law does care if you fly a drone over an army base or airport.

Trevor.Pott: I’d prefer no drones near me, unless they’re delivering me things.

Josh.Folland: Even that will require much lobbying. As it stands now, the legislation emphatically does not allow for it. Last I heard they were trying to carve out an altitude zone to enable it, but I don’t know if it got anywhere.

Katherine.Gorham: There was...

Who should have your fingerprints?

posted by WeBreakTech

Josh.Folland: Did you see this article? “Feds Walk Into A Building, Demand Everyone’s Fingerprints To Open Phones.”

Katherine.Gorham: Wow. Dystopian much?

Josh.Folland: Very. But I was always under the impression the law could compel you to give up your fingerprint.

Trevor.Pott: Josh is correct.

Josh.Folland: Them storming a building to collect them en masse is mildly frightening. (I say “mildly” because this sh*t just doesn’t surprise me anymore.)

Katherine.Gorham: They had a warrant. A super-vague warrant, to be sure, but it wasn’t totally random.

Trevor.Pott: I don’t care. They eliminated the presumption of innocence for an entire building’s worth of people. That’s bulls**it.

Josh.Folland: They had a warrant to try to find evidence to get a less-stupid warrant.

Katherine.Gorham: I’m not saying it was a good move. Just not a warrantless bad one. Also, what’s the data retention policy on randomly collected fingerprints? Forever?

Josh.Folland: I can only imagine it goes in “your file”, yeah. Prosecutors would love to have every person’s fingerprints forever, no? As opposed to waiting until they get put into the system.

Trevor.Pott: They collected my fingerprints at the airport when I applied for a NEXUS card, and told me they would be retained by both nations, presumably forever. Once they have that info, does anyone expect them to give it up?

Katherine.Gorham: No. But I wondered if there was any specific legislation about it.

Trevor.Pott: There’s lots of precedent in the UK for Law Enforcement Agencies (LEAs) not deleting fingerprints, DNA and more when they are supposed to. I expect all members of the Five Eyes to carry equal antipathy towards their own citizens. In Canada, we have Bill C-51, which effectively hands our LEAs carte blanche to do anything they want.

Katherine.Gorham: I don’t really care if...

Finding troubleshooting help

posted by Katherine Gorham

One of the problems with being an accidental sysadmin – or really any kind of sysadmin – is that other people make inaccurate assumptions about what you can do and what you know. “You work for a computer company, don’t you? I can’t get to my files and there’s this popup asking me for something called BitCoin…. Can you just get rid of the popups for me?” (Sure. Along with your entire operating system, which I am going to have to delete and then reinstall. I hope you’re up to date on your backups. Please tell me you have backups. Oh, you thought I could just make the ransomware magically go away?*) Sometimes the weird demands come simply because the person doing the asking doesn’t know what is possible in the ever-changing world of IT. But sometimes the requested help is theoretically possible. Maybe. You think. It’s then a case of hitting the internet to see if a) other people have the problem, b) it really is fixable, and c) someone can explain how to fix it in enough detail that you aren’t mucking blindly about in, for example, the Windows Registry. (Don’t do that, at least not blindly.) You could just head straight to a search engine and give your Google-fu a workout. But this column is supposed to be about saving you time, so behold: sites where you can ask your troubleshooting question and have a decent chance of getting a useful answer.

Stack Exchange

“That’s the big one,” according to my highly unscientific survey of exactly one other sysadmin. But he does have decades of experience, and he has a good point. Stack Exchange is enormous. More valuably, it is organized and focused. Stack Exchange is actually a collection of...

SFP cables suck and I hate working with them.

posted by Josh Folland

Last week I had to head to one of our colocation sites to retrieve some hardware to be moved to another city, and to test out some new swank jank 10GbE switches. These new switches use SFP+, which means making sure the switches are compatible with the transceivers on the cables. As soon as I discovered this was on the to-do list for the day, my stress levels rose. See, SFP+ cables are great in theory. They’ve got a little PCB with a fancy clip, they do 10GbE, and… Well, that’s about it. It’s a cable. It is a conduit for electrons. What I hate most about SFP cables is the clip and tab. It’s truly awful design – if you break the plastic tab, your only hope for disconnecting the cable is to get a small screwdriver or something similar into the latching mechanism. This is where switch and cable designers must want you to lose your temper. On switches with two rows of ports stacked one on top of the other, the ports are, for some brilliant reason, oriented so that the latches face one another (or at least they are on the switches I’ve encountered). This means that if you make the mistake of connecting two SFP cables one above the other (or are forced to by capacity) and break the tabs, they’re fused to the switch permanently for all intents and purposes. Depending on the design of the latching mechanism on the cable, there may very well be no feasible way to get a screwdriver or other tool into the gap. I know what you’re thinking. I must be doing it wrong. Like most hardware in IT, there’s...

Data Security: don’t just roll your eyes at leaked UFO emails

posted by WeBreakTech

In Breakroom, WeBreakTech staffers chat about the last couple of weeks in tech. What’s new? What’s broken? What are we working on? What makes us want to hurl things into traffic? Sarcasm, salty language, and strong opinions abound.

_____________________________________________________________________________________________

Trevor.Pott: “WikiLeaks publishes cryptic UFO emails sent to Clinton campaign from former Blink 182 singer.” So. WikiLeaks.

Josh.Folland: This only confirms my suspicions that politicians and celebrities are lizard people.

Trevor.Pott: The WikiLeaks thing has some potential implications for real-world IT. Putting the politics aside, let’s look at what’s happened here. Somehow, WikiLeaks got hold of a bunch of stuff they shouldn’t have. In some cases, we know the source (such as Chelsea Manning). In others, we don’t (such as the DNC leaks). But in each case, the information has been leaked not only with the intention of making information known, but with theater: the intention of causing the maximum possible amount of hype around the leaks. I think this adds a dimension to any data security discussion. We’re beyond simply “your data may go walkabout” and well into “people may use leaked data as part of a coordinated smear campaign that can hurt far more than a simple data dump.” Do we think this new approach by WikiLeaks will change the dynamics of data protection for corporations and/or governments?

Josh.Folland: Is this really anything new, though? People have been digging up dirt and using it against one another forever. The mechanics of how you get said data are all that’s changing.

Trevor.Pott: That’s an interesting question, and I think it goes down two paths: 1) is the danger of a leak only from the people leaking it, and 2) does timing make a leak more sensitive, and maybe there is a call for...

Lit Screens: Killer robots, exploding phones, and new controllers

posted by Josh Folland

Blizzard launches its first PvE event in Overwatch

Blizzard launched its first PvE event this week: Halloween Terror! Everyone’s favorite tank Reinhardt tells a tale about the legendary Dr. Junkenstein and his creations. You and three other players fight wave after wave of suicide-bombing robots and super-tanky Reapers, Roadhogs and Mercies, plus a Junkrat spamming grenades with infinite ammo. It’s a fun change of pace from the strictly PvP-based main game. Once again, Blizzard shows its mastery at creating a universe and putting it to work. Players were already clamoring for an Overwatch campaign, saying they’d be willing to shell out additional cash on top of the $40 main game if Blizzard ever put one together. Halloween Terror is very derivative – you defend a point against hordes of zombie-like machines – but that hardly detracts from the fun. The same could be said for nearly anything Blizzard has ever done: Blizzard is the best in the business at taking something familiar, putting its own spin on it and knocking it out of the park – especially when it comes to PvE content. Halloween Terror is completely free if you’ve already got Overwatch, and along with the new brawl mode there’s a handful of fun new skins, voice lines, victory poses and other cosmetic content that you can use in the main game.

Samsung axes the Note 7 permanently

Samsung fully recalled its flagship phone, the Galaxy Note 7, earlier this week. After an initial recall due to phones exploding or catching fire, the company went back to the drawing board and issued patches limiting battery charge levels and fast charging. A few weeks have passed since then and the issues have not been resolved, so Samsung has gone with a full, permanent...

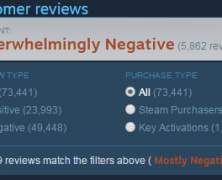

Preorders are crap and you’re part of the problem

posted by Josh Folland

Back in the day, video game preorders were hugely beneficial. We didn’t have digital distribution – at least not on the scale we’ve come to love and hate. A preorder meant you went to your local EB Games, GameStop, Future Shop or $preferred_local_retailer, and they’d reserve a physical copy of the game for you so you’d be guaranteed to play it as soon as possible. I have fond memories of going to the mall at midnight to pick up highly anticipated titles like Halo 3 and then skipping class the next day because I stayed up all night playing (yeah, I was that kind of student). I’d get to meet all kinds of fans and members of the local gaming community while waiting in line, and in some ways it validated my decision: I wasn’t the only one crazy enough to do something like that. Event organizers would hand out flyers for their upcoming tournaments and gatherings, and everyone was nice and chummy. Ah, nostalgia. Since then, we’ve gotten our hands on better internet connections and more reliable digital distribution platforms like Steam, Origin, Battle.net, gog.com and plenty more. Along with this convenience came the advent of day-one patches, locked DLC included in the main game’s files and all forms of DRM butchery consumers despise. This really started to ramp up around 2008-2009. Right around the same time, game developers saw the console market start to explode thanks to smash hit titles like Call of Duty 4: Modern Warfare, the aforementioned Halo 3, Gears of War, Assassin’s Creed and a wide variety of other franchises that pushed out yearly installments. PC gamers repeatedly got the short end of the stick. On the few-and-far-between occasions that a developer chose to publish their game...

Esports acquisitions, Virtual Reality and Political Overtones, Oh My!

posted by Josh Folland

In the second installment of Lit Screens, I want to talk about some of the recent esports acquisitions and shine a spotlight on the Oculus Rift and the folks behind it. Let’s jump right in, shall we?

Celebrities, athletes and billionaires investing in esports organizations

Esports investments from big-time organizations and individuals have been popping up more and more. A few weeks back, Team Liquid, an esports organization with a storied history as the go-to StarCraft community website alongside its teams, players and staff, announced the sale of a controlling interest to Peter Guber (co-owner of the Los Angeles Dodgers) and Ted Leonsis (owner of the Washington Capitals and Wizards), as well as several other investors. Together, they formed a new esports ownership group called aXiomatic, helmed by CEO Bruce Stein. Just before that, Team Dignitas – another esports organization with a long history across many competitive titles over the last 13 years – also sold a controlling interest to Josh Harris and David Blitzer, co-owners of the New Jersey Devils; and before that, Shaquille O’Neal’s organization NRG esports picked up team Mixup (formerly Luminosity Gaming) after this candid tweet: Continuing the trend is electronic music producer, DJ and professional partier Steve Aoki, who has invested in team Rogue, which has developed quite a name for itself in both Overwatch and Counter-Strike: Global Offensive. Steve has long been a gamer and is passionate about esports, and after seeing Rogue’s dominance in Overwatch over the last several months, it comes as no surprise that someone invested in the team. What’s of particular interest here is the explosive entrance of investors in the past year. There has been no shortage of sponsors over the esports industry’s lifetime, but those have generally been limited to companies trying to push their products or services...

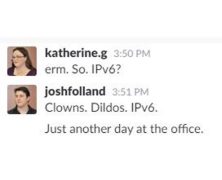

What’s Good About IPv6? More Addresses.

posted by WeBreakTech

In Breakroom, WeBreakTech staffers chat about the last couple of weeks in tech. What’s new? What’s broken? What are we working on? What makes us want to hurl things into traffic? Sarcasm, salty language, and strong opinions abound.

_______________________________________________________________________________________________

Trevor.Pott: So we could discuss the transition to IPv6, which is apparently what I need to do for a client this weekend.

Josh.Folland: F*ck IPv6.

Trevor.Pott: Dude.

Josh.Folland: I get that it’s a necessary evil and it’s got all kinds of great things, but I like remembering *.*.*.40

Katherine.Gorham: What great things? I thought the foot-dragging about the move to IPv6 was because there WEREN’T great things. Just vulnerabilities and administrative headaches.

Trevor.Pott: There aren’t. But we have now reached the point where ISPs aren’t handing out IPv4 addresses to people in the North and other not-major-urban centers. If you aren’t on IPv6, you’re not going to be able to serve all customers anymore. And that is a big switch that I have only started to see in the past few months.

Katherine.Gorham: Wait… IPv4 doesn’t talk to IPv6?

Trevor.Pott: It does not. IPv4 uses 32-bit addressing. It CAN’T talk to IPv6, which uses 128-bit addressing. It simply has no way to do so. In order to “transition” to IPv6, you need to get IPv6 from your ISP (or ask SixXS nicely for a tunnel and a subnet), then implement BOTH stacks.

Katherine.Gorham: But most of the world is still IPv4. Surely someone thought up a workaround?

Josh.Folland: …There is not an algorithm to translate it? I never thought they were entirely mutually exclusive.

Trevor.Pott: No, there is no direct, supported workaround. There are totally unsupported ways to do it, but in general it was decreed by the ivory tower gods 20 years...
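Trevor’s 32-bit versus 128-bit point is easy to check with Python’s standard `ipaddress` module. The sketch below only compares the sizes of the two address spaces. The example addresses are not from the conversation; they come from the documentation ranges reserved by RFC 5737 (IPv4) and RFC 3849 (IPv6).

```python
import ipaddress

# The entire address space of each protocol: 0.0.0.0/0 and ::/0.
v4_space = ipaddress.ip_network("0.0.0.0/0")
v6_space = ipaddress.ip_network("::/0")

print(v4_space.num_addresses)            # 2**32 addresses
print(v6_space.num_addresses == 2**128)  # 2**128 addresses

# Documentation-range example addresses (one ending in .40, for Josh).
a4 = ipaddress.ip_address("192.0.2.40")
a6 = ipaddress.ip_address("2001:db8::40")

# Each address family has its own version and its own bit width.
print(a4.version, a4.max_prefixlen)  # 4 32
print(a6.version, a6.max_prefixlen)  # 6 128
```
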

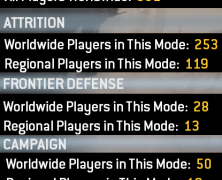

Playlist-based Matchmaking is crap.

posted by Josh Folland

In preparation for Respawn’s highly anticipated Titanfall 2 (dropping at the end of the month), I reinstalled its predecessor last week. The last time I had played Titanfall was November 2014, according to my Origin account. The game is highly mechanical and there are a lot of advanced tactics to brush up on. Because it’s built on the Source engine (which itself was built from id Tech/Quake engine code), you get full air control and momentum conservation in the form of bunnyhopping, on top of the already-excellent wall running and double jumping. All of this is promised to return in the sequel, so I figured, why not re-master these techniques to get a bigger edge on the competition in Titanfall 2? I want to make one thing perfectly clear before I dive into the meat here: Titanfall is fun. It’s one of the most fun FPS games I have ever played, in fact – there’s an excellent blend of tactics, movement, aim and general FPS skill that doesn’t often get to shine across such a broad spectrum in other games. With so many different ways to play, it certainly ticks the box for the competitive mantra “easy to learn, hard to master.” The gameplay is beautifully sculpted in a way you can only expect from the geniuses behind Call of Duty 4: Modern Warfare (the best Call of Duty title to date, I might add). It sounds cool, it looks cool, it’s fast-paced, and there’s an extremely high skill ceiling. However, nearly everything else about the game is crap. The netcode certainly leaves something to be desired – hit registration is lackluster, the tick rate (the rate at which the server updates the world with new information, i.e., you shot the bad guy and he...
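Tick rate is easiest to reason about as the time between server updates. This is a back-of-the-envelope sketch; the tick rates are illustrative values, not Titanfall’s actual server settings.

```python
def tick_interval_ms(tick_rate_hz: float) -> float:
    """Milliseconds between consecutive server world updates."""
    return 1000.0 / tick_rate_hz


# Illustrative tick rates only; not measured from any specific game.
for rate in (10, 20, 60, 128):
    print(f"{rate:>3} Hz -> {tick_interval_ms(rate):.1f} ms between updates")
```

In this simplified model, an action that reaches the server just after a tick waits up to one full interval (50 ms at 20 Hz) before it is processed. That delay comes on top of network latency.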

Kerbal Space Program devs jettison their (un)payloads from Squad

posted by Josh Folland

Squad – the developer behind Kerbal Space Program – has had a bit of a rough year. Its media director ‘PDtv’ was fired in May of this year. Afterward, he revealed on 4chan and imgur the working conditions he and his co-workers faced at the studio. Once free of his NDA, he took to the tubes to set the record straight, citing long days of up to 16 hours and unlivable salaries ($2,400 USD/year). Below is an excerpt of PDtv’s venting. You can see the full extent of his frustrations here. Several of his co-workers and colleagues corroborated many of his claims on reddit, which you can see here in both the OP and the top comments by /u/r4mon, a modder who worked closely with many of the developers at Squad. Yesterday, several of the developers at Squad quit, making their announcement on the Kerbal Space Program subreddit. While there’s no official statement as to their reasoning, one can gather it’s in no small part due to the conditions raised by PDtv earlier this year. On the bright side, /u/larkin-richards of NASA appears to have extended them job offers. On to bigger and better...

The Accidental Sysadmin

posted by Katherine Gorham

Welcome to the first installment of The Accidental Sysadmin, a column for people on the front lines of IT who are still wondering how they got there. Because I share an office with this guy, I have been hearing a lot about the death of the sysadmin. This isn’t what it sounds like. It wasn’t Colonel Mustard in the server room with the cable crimper. There are no overworked nerds combusting from sheer stress. I’m talking about the demise of sysadmin as a job title. Actually, I’m quite sure that there are overworked nerds combusting from sheer stress, but that’s not what I’m on about here. Whether you call your sysadmin a computer systems administrator, the IT guy, some flavour of “engineer” or even an “architect”, the jobs are under threat. Technology is evolving, demands are changing, and this is changing the nature of systems administration. Corporate IT is getting easier to administer. No, seriously, stop laughing for a minute. It is. IT administration is still frustrating and labor-intensive, but it is no longer the sort of job that only a highly trained, expensively accredited Mensa member with advanced skills in black magic can perform. Advances in hardware mean that businesses that formerly needed their own data center can make do with a half-rack of hyperconverged gear shoved in the corner of any room with decent air conditioning. Businesses that never needed a data center in the first place may have simply done away with most of their equipment and turned to various public cloud services for their compute, storage, applications, and analytics. As my office-mate wrote, “These tools make it easy for a handful of half-way competent generalists to accomplish what once required teams of specialists.” That’s me: the halfway competent generalist. Don’t you work at a computer...

Archival cloud storage can be an affordable backup layer

posted by Trevor Pott

Welcome to the first blog in our new column. This column is dedicated to hardware, software and services for the Small and Medium Business (SMB) and commercial midmarket spaces. In most countries, companies too small to be generally called enterprises make up over 99% of employer businesses, yet they are often the most poorly served by technology vendors and technology journalism. This is Tech for the 99%. Backups are the bane of many an IT department. Big or small, nobody escapes the need for backups, and backups can get quite expensive. Smaller organizations trying to back up more than a terabyte of data usually have the hardest time finding appropriate solutions. This is slowly changing. For many years I avoided advocating backing up data to the public cloud. Even the cheapest backup service – Amazon’s Glacier – was too expensive to be realistically useful, and the backup software that talked to it was pretty borderline. Times changed. Software got better. Cloud storage got cheaper. A new competitor emerged in the form of Backblaze with its B2 product. B2 offers archival cloud storage for $0.005 USD (half a cent) per gigabyte per month. That’s notably less expensive than Glacier’s $0.007 per gigabyte, and it makes Backblaze B2 $51.20 USD/month to store 10TB. That’s an inflection point in affordability. B2 and Glacier both charge to retrieve data, and they’re slow. They aren’t the sort of thing any organization should be using as a primary backup layer. It doesn’t make a lot of sense to use them to regularly restore files that staff accidentally delete, or to cover other day-to-day backup needs. Similarly, B2 and Glacier aren’t immediate-use disaster recovery solutions. You can’t back up your VMs to either solution and then push a button and turn them on...
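The arithmetic behind those figures is easy to reproduce. This sketch uses the prices quoted above and treats them as per gigabyte per month, for storage only. It counts 1TB as 1,024GB, which is how the $51.20 figure works out. Retrieval, request and egress fees are deliberately left out.

```python
GB_PER_TB = 1024  # binary terabytes, matching the $51.20 figure in the text

# Prices as quoted in the post (USD per GB per month, storage only).
PRICES_PER_GB_MONTH = {
    "Backblaze B2": 0.005,
    "Amazon Glacier": 0.007,
}


def monthly_storage_cost(tb: float, price_per_gb: float) -> float:
    """Storage-only monthly cost in USD; retrieval and request fees excluded."""
    return tb * GB_PER_TB * price_per_gb


for service, price in PRICES_PER_GB_MONTH.items():
    print(f"{service}: ${monthly_storage_cost(10, price):.2f}/month for 10TB")
```

Under these assumptions, 10TB works out to about $51.20 a month on B2 versus about $71.68 on Glacier, before any retrieval costs.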

TwitchCon, Brooklyn Beatdown, No Man’s Sky and Hearthstone

posted by Josh Folland

Welcome everyone! This is the first installment of Lit Screens, a new column we’re running on WeBreakTech where I’ll briefly summarize some of the more noteworthy things that have happened in gaming this past week or are happening soon. Without further ado, let’s dive in!

TwitchCon 2016 – September 30th to October 2nd

This is Twitch’s second year running their conference meme-fest, and they’ve moved out of the Moscone Center in San Francisco to the San Diego Convention Center. This should prove to be a better fit for the event, as the Moscone is split across a few different buildings. After drawing 20,000 people to their first event, it’s fair to say they’re managing their growing pains well with a change of venue. I personally haven’t been, but it’s high on my list of gaming conferences to check out at least once in my life. Attendees get to meet some of their favorite streamers, check out new hardware, games and merch, and take in the ever-entertaining stage shows, panels and competitive exhibitions. The H1Z1 King of the Hill Invitational is back, with the grand finale being played out on Sunday over at twitch.tv/twitch. After the massive success of last year’s Bob Ross “The Joy of Painting” marathon stream, there’s even a Bob Ross Paint-a-long event taking place. The itinerary for TwitchCon is jam-packed, so head over to http://www.twitchcon.com/schedule/ to see the full schedule. Between all of the activities and events, if you’re remotely interested in streaming, the personalities involved, esports, charity gaming, Twitch or even internet culture in and of itself, there’s something you’ll want to see at TwitchCon.

ESL One NY and Brooklyn Beatdown – Oct 1st to 2nd

ESL One NY sports both Counter-Strike: Global Offensive and also marks...

On the importance of the user experience

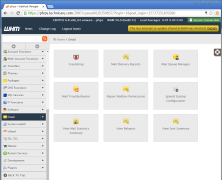

posted by Trevor Pott

Yesterday, after spending many frustrating hours trying to resolve a problem with a VM that had been handed off to me, I tweeted “Wow. Cpanel is awful. O_O”. The developers, rightly, were not pleased to hear this and invited me to share my issues with them. As a strong – some might say annoyingly persistent – advocate of direct engineering/developer engagement with end users, and of the customer advocacy approach to community engagement, I would be a hypocrite to ignore this request. Whatever I may think of cPanel the application, developers reaching out to people they don’t follow on Twitter who express dissatisfaction with their product is exactly the kind of community engagement I believe in. So, rather than simply leave an angry throwaway tweet, I am going to do something of a teardown of my encounter with cPanel: what went wrong, what went right, what is bias on my part and what is clearly broken.

The problem environment

The VM in question is a CentOS 6.x VM running cPanel 56.0.32. The VM is running on on-premises hardware, so I have console access and thus the ability to perform maintenance outside of the cPanel interface. The VM runs on VMware infrastructure and did not appear to have VMware Tools installed when I got hold of it. (This has now been remedied.) The VM has 4GB of RAM, a pair of vCPUs, and a 40GB drive that is roughly 60% full. The system is used primarily for hosting a small ecommerce site and its associated database. The system was configured by a third party and handed off to a client of mine. The client configured basic items, such as e-mail addresses, in case something goes sideways on the system. The system...

Beyond the traditional storage gateway

posted by Trevor Pott

Bringing together all storage into a single point of management, wherever it resides, has long been a dream for storage administrators. Many attempts have been made to make this possible, but each has in some way fallen short. Part of the problem is that previous vendors just haven’t dreamed big enough. A traditional storage gateway is designed to take multiple existing storage devices and present them as a single whole. Because storage gateways are a (relatively) new concept, all too often the only storage devices supported were enterprise favourites, such as EMC and NetApp. Startups love to target only the enterprise. Around the same time as storage gateways started emerging, however, the storage landscape exploded. EMC, NetApp, Dell, HP, and IBM slowly lost market share to the ominous “other” category. Facebook and other hyperscale giants popularized the idea of whitebox storage, and shortly thereafter Supermicro became a $2 billion per year company. As hyperscale talent and experience spread, public cloud storage providers started showing up. Suddenly a storage gateway that served as a means to move workloads between EMC and NetApp, or to bypass the exorbitant prices those companies charged for certain features, just wasn’t good enough. Trying to force a marriage between different storage devices on a case-by-case basis isn’t sustainable. Even after the current proliferation of storage startups comes to an end, and the inevitable contraction occurs, there will still be dozens of viable midmarket and higher storage players with hundreds of products between them. No company – startup or not – can possibly hope to code specifically for all of them. Even if, by some miracle, a storage gateway taking this boil-the-ocean approach did manage to bring all relevant storage into its offering one product at a...

Data residency made easy

posted by Trevor Pott

Where does your data live? For most organizations, data locality is as simple as making sure that the data is close to where it is being used. For some, data locality must also factor in geographical location and/or legal jurisdiction. Regardless of the scope, data locality is a real-world concern for an increasing number of organizations. If this is the first time you are encountering the term data locality, you are likely asking yourself – quite reasonably – “do I care?” and “should I care?” For a lot of smaller organizations that don’t use public cloud computing or storage, the answer is likely no. Smaller organizations using on-premises IT likely aren’t using storage at scale or doing anything particularly fancy. By default, the storage small IT shops use lives with the workload that uses it. So far so good… except the number of organizations that fall into this category is shrinking rapidly. It is increasingly hard to find anyone not using public cloud computing to some extent. Individuals as well as organizations use it for everything from hosted email to Dropbox-like storage to line-of-business applications and more. For individuals, cost and convenience are usually the only considerations. Things get murkier for organizations. The murky legal stuff Whether you run a business, government department, not-for-profit, or other type of organization, at some point you take in customer data. This data can be as simple as name, phone number, and address. It could also be confidential information shared between business partners, credit card information, or other sensitive data. Most organizations in most jurisdictions have a legal duty of care towards customers that requires their data be protected. Some jurisdictions are laxer than others, and some are more stringent with their requirements based on the...

DevOps shouldn’t be a straitjacket

posted by Trevor Pott

The DevOps movement has a dirty little secret it doesn’t want anyone talking about. DevOps isn’t about making you money, or making your life easier. DevOps is about making vendors and consultants money. If you’re lucky and wise, you will be able to choose good partners who, in the course of enriching themselves, also make your business significantly more efficient. But how do you narrow the playing field? The most important thing to do is ask “where does the money go?” Anyone banging on about an IT industry buzzword wants your money. The question is how much they want and what they are proposing to give you for it. In almost every case you don’t need a consultant to get to DevOps. I can tell you the secret sauce of their advice right here: DevOps is hard to implement. This is not because the tools are difficult, the theory is complicated or the value is vague and nebulous. DevOps is hard to implement because it means radical change for many people, many of whom cannot easily see how or why they will still be employed after the process is completed. Fear and a very human resistance to change are what stand in the way of “implementing DevOps culture”. No consultant can change that. No consultant can do anything to ease people through the process or soothe their fears. The management of the business in question needs to recognize the fears of the technologists they employ, engage with them, and provide assurances that everything will be all right once the dust settles. No consultant can do that for management. The best they can do is spend their time trying to serve as a translation layer: explaining to management why the tech staff are reluctant, and explaining to...

Preparing for Office 2016

posted by Trevor Pott

On September 10th, Microsoft announced the official release date for Office 2016 as September 22, 2015. According to a blog post by Julie White, general manager of Office 365 technical product management, Office 2016 will be “broadly available” starting September 22, and organizations with volume license agreements, including those with Software Assurance, will be able to download the new version starting October 1. Office 365 Home and Personal subscribers will be able to update manually starting September 22, and automatic updates for these editions will begin on October 1. These release dates do not leave organizations much time to prepare for the update. The new version boasts new features and changes that may have some administrators scrambling to keep control of their environments. What’s new? Office 2016 adopts the Windows 10 update model, delivering updates through the concept of branches. Organizations that currently control how their Office 365 deployments are updated, using an internal source, will need to determine which branch they will use. The three update options are: Current Branch – monthly updates pushed out by Microsoft’s Content Delivery Network. The default setting for Office 2016 installations is Current Branch. Current Branch for Business – provides updates 4 times a year. This is for organizations that are not on the bleeding edge, need some control over when updates are installed, and want time to test compatibility with other products. First Release for Current Branch for Business – monthly patches that provide the ability to test patches before the rest of the production users are updated. Another enhancement in the new version of Office 365 is support for Background Intelligent Transfer Service (BITS). BITS helps control network traffic when updates are...

Microsoft Band: the verdict is in

posted by Stuart Burns

Being a very unfit kind of guy, I decided it was time to look at getting myself that bit thinner. I decided to try the new Microsoft Band activity tracker and see what it had to offer, it being one of the new kids on the block. The Microsoft Band is actually about more than just fitness. When it came to unboxing and setting it up, there were a few surprises. First, it doesn’t interface with your PC at all. Setup pretty much consists of installing the Microsoft Health app from the relevant mobile phone’s app store and then following the on-phone setup wizard. I was using an Android phone running Lollipop and it worked fine, setting up a Bluetooth pairing without issue. Unfortunately, it won’t let you skip entering your weight, and it requires a Microsoft account. If you are used to a Fitbit or similar, the first thing you will notice is the weight and size of the band. It is noticeably heavier than the Fitbit, as well as more expensive. It does, however, have some nice features that put it on the right side of useful without being boring, including the big bright screen and the ability to see emails and messages come in with a gentle buzz on your wrist. This feature is really handy if, like me, you frequently have your phone on silent. It will discreetly buzz and flash the email subject. It is just a pity that you can’t read the entire email should you choose to do so. In general the band feels really well put together, and the sliding-clasp-style holder is unique. It just oozes quality construction. The magnetic...

VMworld 2015 HOMELAB GIVEAWAY

posted by Josh Folland

VMworld 2015 is coming up fast, and that means CONTESTS! vExperts always get the extra-special treatment, and this year is no exception. Catalogic Software is looking to lead the pack by giving away a complete turnkey VMware homelab kit to one lucky vExpert or NetApp A-Team member. This is a serious prize that has even us at WeBreakTech drooling. What can I say, we’re suckers for flashy hardware! Up for grabs is a complete homelab package, decked out with the following:

- 1x Cisco SG300-20 managed switch
- 1x 2U NetApp FAS 2020 3.6TB Filer
- 2x 2U servers w/ quad-core Intel processor and 32GB DDR4 memory
- 1x Catalogic ECX perpetual license
- 1 year VMware VMUG Advantage w/ Eval Experience license
- $300 to soup up the lab, customize it or pick up a rack

Now that you’re foaming at the mouth, I’m sure the next question on your mind is something along the lines of “shut up and tell me how to sign up already!” The good news is that it’s quite simple and shouldn’t take longer than 10-15 minutes for the savvy vExpert and A-Team nerds. Head over to Catalogic’s contest page here, where you’ll find a selection of questions related to Catalogic’s bread and butter, copy data management. Choose one of them and answer it in 300 words or less in the comments section of that page, or post a meaningful reply to another commenter’s response. Remember to restate the question at the beginning of your comment, or refer to the original post if you’re replying to someone. You’re free to submit as many entries as you’d like. Entries are judged on their individual merit rather than their number, but each additional entry still increases your odds. The contest ends on September 2nd, 2015 and the winner (who...

Inateck HB4009 USB 3.0 3-Port Hub Review

posted by Josh Folland

Inateck is quickly becoming one of my favorite consumer electronics vendors. They make all kinds of relatively generic but still useful gear, and they do it well. I last reviewed their FD2002 Dual Bay USB HDD Docking Station and their BP2001 Bluetooth Portable Speaker. Today, we’re taking a look at the HB4009 USB 3.0 3-Port Hub +1 Magic-Port. My first thought was “what the hell is a Magic Port?” – Magic and IT are generally considered mutually exclusive, except when considering Clarke’s third law: any sufficiently advanced technology is indistinguishable from magic. And perhaps when considering some of the arcane workarounds I’ve seen my colleagues come up with over the years, but that’s a story for another day. For the HB4009, the Magic Port enables cross-platform file transfers and KVM capabilities. The idea is that you can connect it between two different PCs, or between your PC and your Android device, and use it both as a glorified USB cable and as a KVM switch. It also boasts the ability to share the clipboard between devices, and the included Mac KM Link software detects when the mouse reaches the border of any monitor and jumps it over to the other computer. The package comes with the hub itself, which has a male USB cable coming out of one end and 3 USB 3.0 ports plus the Magic Port on the other, where you attach the included male-to-male USB cable. It also includes a USB 3.0 to Micro-USB adapter so you can connect the Magic Port to your Android devices. The USB hub and its cable are about a foot long, with the male-to-male cable adding another 3.93 feet. The first thing I tested was to make sure the USB hub actually worked, having minimal success with these things...

Review: Micron M500DC & Crucial CT480M500 SSD

posted by Josh Folland

I have entirely done away with running my personal and professional workloads on spinning rust. In my desktop, I’ve been running a Crucial CT480M500 480GB SSD for at least the last 18 months. My laptop is a bit newer, at just about 6 months old, and the second we received it we swapped out the generic Toshiba 5400RPM drive for a shiny M500DC 480GB SSD from Crucial’s parent company, Micron. My workloads range from normal document processing and internet browsing up to audio & video editing, rendering and, of course, gaming. These workloads are far from anything as IO-intensive as you’d expect in a reasonably sized data center (I am but one man!), but if there’s one thing I am above all else, it’s impatient. Load times lead to frustration and boredom. Boredom leads to finding something else to do. Finding something else to do leads to forgetting about what I was originally doing, hate leads to suffering, yada yada yada. Long story short: it’s bad for productivity (and my blood pressure) to work on crappy hardware. Fortunately, both the Crucial CT480M500 and the Micron M500DC have helped me get a clean bill of health from my doctor. As far as my day-to-day workloads go, they serve things up just fine. I’ve gone from hibernating my workstations to simply powering them off, because the time from a cold start to where I want to be is so negligible that I may as well reap the benefits of saving everything I have open and getting a fresh boot each time (all of this made easier by the fact that a large portion of modern software is cloud and browser-based). At 480GB (with models of varying sizes) I have enough capacity to locally...

Inateck FD2002 Dual Bay USB 3.0 HDD Docking Station Review

posted by Josh Folland

The Inateck FD2002 is a dual-bay USB 3.0 HDD docking station. It’s plug and play, doesn’t require drivers and, most interestingly, has a built-in, offline clone function at the press of a button. Put a drive into slot A and another into slot B (drive B must be equal to or greater in size than drive A), press the clone button and it will copy the data over automatically (all you need to do is power the device; no computer required). Here are the specifications from Inateck:

| Specification | Value |
|---|---|
| Product Type | Inateck FD2002 |
| Weight | 410 g / 0.903 lbs |
| Offline Cloning | Supported |
| Supported Capacity | Up to 4 TB |
| Color | Black |
| Supported Drives | 2.5″/3.5″ SATA I/II/III HDD/SSD |
| Interface | USB 3.0 |
| Material | Plastic |
| Hot Plug, Plug & Play | Supported |
| Licenses | CE, FCC |
| Measurements (L/W/H) | 150 x 109 x 60 mm / 5.91 x 4.29 x 2.36 in |
| Operation Environment | Storage: -40 to 70 °C; Operation: 5 to 55 °C |
| OS Support | XP/Vista/7/8 (32/64-bit), Mac OS, Linux |

As someone who has worked on the bench and regularly tests hardware for not only benchmarking/performance purposes but maintenance as well, the FD2002 strikes me as a handy tool to have on hand, especially if you’re in the business of swapping out and dealing with hard drives in bulk. The USB 3.0 “super speed” interface provides transfer rates and latencies comparable to a normal SATA connection. Hell, it even beat out the old Maxtor drives (left) I have inside my primary workstation: We used a pair of Seagate ST2000DL 2TB hard drives for our tests, which are 5900 RPM green drives. To see speeds like this from a hard drive, let alone one that runs at a lower speed AND is attached externally, is quite impressive. The Inateck FD2002 utilizes USB-attached SCSI (UAS) over traditional Bulk-Only Transport (BOT)...

Supermicro, VSAN and EVO:Rail

posted by Trevor Pott

Supermicro and VMware have released a new generation of VMware EVO:RAIL appliances and VMware Virtual SAN Ready Nodes. The arrival of the new Supermicro servers coincides with the release of VMware’s vSphere 6.0 suite, which is VMware’s most important release in several years. Combined, the Supermicro and VMware launches are more than simply an incremental improvement and serve as an exceptional showcase for the power and flexibility the modern software defined datacenter is capable of delivering. The 2015 generation of Supermicro servers specially designed with VMware’s VSAN and EVO:RAIL in mind are the 2U TwinPro² and the 4U FatTwin series servers. Supermicro’s various partners have led the industry in delivering hyperconverged solutions using previous generations of Twin series servers. Lessons learned there, combined with the increased capability of the next generation of VMware software, have driven the design of the next generation of Supermicro Twin series servers. Increments of change A generational change can occur either because a major feature is introduced, or through a series of iterative improvements that, combined, result in a significantly superior product. The 2015 Twin series servers have done both. Supermicro hasn’t simply relied on evolving standards and new generations of equipment being made available by upstream partners. The quality of Supermicro servers (which Supermicro feels was already quite good) has been significantly improved. Supermicro is constantly working with customers and partners to identify design elements that need improvement, and the 2015 servers are the result of many such changes. The most notable incremental improvements are the addition of Supermicro’s Titanium Level high-efficiency digital power supplies (more detail below) and architecture designs that improve airflow for optimal cooling.
In addition to these green computing improvements, the rail kits have undergone several design tweaks...

Review: Lumia 830 vs Lumia 930 showdown

posted by Adam Fowler

Microsoft have provided me with a new Nokia Lumia 830 to roadtest, so I was keen to compare it against the current flagship model – the Nokia Lumia 930. The 830 is a mid-range phone, though, so there are many differences between the two. I reviewed the Lumia 930 a few months ago, so we’ll mostly cover the 830, with some comparisons to the 930. OS The Lumia 830 is one of the first phones to ship with Lumia Denim, following on from the Lumia Cyan release (they go up alphabetically, like Ubuntu releases). Microsoft list the features here, and there are a few nice additions. For Australians such as myself, along with Canadians and Indians, there is now alpha Cortana support. I’ve started to test this, and speech recognition is definitely better than it was previously. The other, more important benefits apply only to certain Lumia phones, and mostly focus on camera improvements, as well as features for the glance screen. Screen Yes, the glance screen is back! This was one of the biggest features missing from the Lumia 930, but due to the 830 using an LCD screen rather than the 930’s OLED. Grabbing your phone out of your pocket and just looking at it to know the date/time along with a second piece of information is simple but efficient. I’d like to see more options around this – I don’t like choosing between weather OR my next meeting; I’d like to see both. Hopefully as glance screen matures, it will become even more customisable. Although both phones have a 5 inch screen, the 830 runs at 720 x 1280, which is much lower than the 930’s 1080 x 1920. I couldn’t visibly tell the difference in general day-to-day use, so although more...
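As a rough, back-of-the-envelope check on that resolution gap, assuming both panels measure exactly 5 inches diagonally as stated above (an approximation, not a measured figure), the pixel counts and approximate pixel densities work out as:

$$
\begin{aligned}
\text{Lumia 830: } 720 \times 1280 &= 921{,}600 \text{ px}, & \frac{\sqrt{720^2 + 1280^2}}{5\ \text{in}} &\approx 294\ \text{ppi} \\
\text{Lumia 930: } 1080 \times 1920 &= 2{,}073{,}600 \text{ px}, & \frac{\sqrt{1080^2 + 1920^2}}{5\ \text{in}} &\approx 441\ \text{ppi} \\
\frac{2{,}073{,}600}{921{,}600} &= 2.25, & \frac{1080}{720} &= 1.5
\end{aligned}
$$

So the 930 has 2.25 times as many pixels but only 1.5 times the linear pixel density, which may help explain why the difference is hard to spot in day-to-day use.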